Achieving Data Excellence: How Generative AI Revolutionizes Data Integration

Data and generative AI services that provide data analysis, ETL, and NLP enable robust integration strategies for unlocking the full potential of data assets.

Editor's Note: The following is an article written for and published in DZone's 2024 Trend Report, Enterprise AI: The Emerging Landscape of Knowledge Engineering.

In today's digital age, data has become the cornerstone of decision-making across various domains, from business and healthcare to education and government. The ability to collect, analyze, and derive insights from data has transformed how organizations operate, offering unprecedented opportunities for innovation, efficiency, and growth.

What Is a Data-Driven Approach?

A data-driven approach is a methodology that relies on data analysis and interpretation to guide decision-making and strategy development. This approach encompasses a range of techniques, including data collection, storage, analysis, visualization, and interpretation, all aimed at harnessing the power of data to drive organizational success.

Key principles include:

- Data collection – Gathering relevant data from diverse sources is foundational to ensuring its quality and relevance for subsequent analysis.

- Data analysis – Processing and analyzing collected data with statistical and machine learning (ML) techniques reveals valuable insights for informed decision-making.

- Data visualization – Representing insights visually through charts and graphs facilitates understanding and aids decision-makers in recognizing trends and patterns.

- Data-driven decision-making – Integrating data insights into decision-making processes across all levels of an organization enhances risk management and process optimization.

- Continuous improvement – Embracing a culture of ongoing data collection, analysis, and action fosters innovation and adaptation to changing environments.

Data Integration Strategies Using AI

Data integration combines data from various sources into a unified view. Artificial intelligence (AI) improves integration by automating manual tasks, increasing accuracy, and handling large volumes of diverse data. Here are four data integration strategies and patterns that use AI:

- Automated data matching and merging – AI techniques such as ML and natural language processing (NLP) can identify records that refer to the same entity across disparate sources and merge them automatically.

- Real-time data integration – Stream processing and event-driven architectures, combined with AI models, can facilitate real-time data integration by continuously ingesting, processing, and integrating data as it becomes available.

- Schema mapping and transformation – AI-driven tools can automate the process of mapping and transforming data schemas from different formats or structures. This includes converting data between relational databases, NoSQL databases, and other data formats — plus handling schema evolution over time.

- Knowledge graphs and graph-based integration – AI can build and query knowledge graphs representing relationships between entities and concepts. Knowledge graphs enable flexible and semantic-driven data integration by capturing rich contextual information and supporting complex queries across heterogeneous data sources.
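To make the first pattern concrete, here is a minimal sketch of automated matching and merging in Python. It is a simplified stand-in rather than a production technique: instead of a trained ML or NLP model, it scores name similarity with the standard library's `difflib.SequenceMatcher`. The record fields (`name`, `email`, `phone`), the `match_and_merge` helper, and the 0.85 threshold are all hypothetical.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase, drop punctuation, and collapse whitespace before comparing."""
    kept = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def similarity(a, b):
    """Return a 0..1 similarity score for two normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def match_and_merge(source_a, source_b, threshold=0.85):
    """Merge records from two sources that appear to describe the same entity.

    Each record in source_a is paired with its most similar unused record in
    source_b. Pairs scoring at or above `threshold` are merged: non-empty
    fields from source_a win, and gaps are filled from source_b. Records
    without a match are passed through unchanged.
    """
    merged, used = [], set()
    for rec_a in source_a:
        best_i, best_score = None, 0.0
        for i, rec_b in enumerate(source_b):
            if i in used:
                continue
            score = similarity(rec_a["name"], rec_b["name"])
            if score > best_score:
                best_i, best_score = i, score
        if best_i is not None and best_score >= threshold:
            used.add(best_i)
            combined = dict(source_b[best_i])
            combined.update({k: v for k, v in rec_a.items() if v})
            merged.append(combined)
        else:
            merged.append(dict(rec_a))
    # Keep source_b records that never matched anything in source_a.
    merged.extend(dict(r) for i, r in enumerate(source_b) if i not in used)
    return merged


crm = [
    {"name": "Acme Corp.", "email": "info@acme.example", "phone": ""},
    {"name": "Globex", "email": "", "phone": "555-0100"},
]
billing = [
    {"name": "ACME Corp", "email": "", "phone": "555-0199"},
    {"name": "Initech", "email": "ap@initech.example", "phone": ""},
]
for record in match_and_merge(crm, billing):
    print(record)
```

Comparing every pair is quadratic, so a real matcher would typically add a blocking step (for example, comparing only records that share a postal code) and replace the fixed threshold with a learned similarity model.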

Data integration is the backbone of modern data management strategies and is pivotal in giving organizations a comprehensive understanding of their data landscape. By seamlessly combining data from disparate sources, such as databases, applications, and systems, data integration ensures a cohesive and unified view of organizational data assets.

One of the primary benefits of data integration is its ability to enhance data quality. By consolidating data from multiple sources, organizations can identify and rectify inconsistencies, errors, and redundancies, thus improving their data's accuracy and reliability. This, in turn, empowers decision-makers to make informed choices based on trustworthy information. Let's look closely at how we can utilize generative AI for data-related processes.
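Consolidation surfaces inconsistencies because the same entity now appears side by side from several systems. The following Python sketch is illustrative rather than taken from any specific tool: it groups records by a shared key and reports fields whose non-empty values disagree across sources. The `customer_id` key, the source names, and the `find_conflicts` helper are hypothetical.

```python
def find_conflicts(sources, key="customer_id"):
    """Report fields whose non-empty values disagree across sources.

    `sources` maps a source name to a list of dict records that share `key`.
    Returns {key_value: {field: {source_name: value}}} for every conflict.
    """
    by_key = {}
    for source_name, records in sources.items():
        for rec in records:
            by_key.setdefault(rec[key], {})[source_name] = rec

    conflicts = {}
    for key_value, per_source in by_key.items():
        fields = {f for rec in per_source.values() for f in rec if f != key}
        for field in sorted(fields):
            # Empty or missing values are gaps, not conflicts.
            values = {
                src: rec[field]
                for src, rec in per_source.items()
                if rec.get(field) not in (None, "")
            }
            if len(set(values.values())) > 1:
                conflicts.setdefault(key_value, {})[field] = values
    return conflicts


sources = {
    "crm": [
        {"customer_id": 1, "email": "ana@example.com", "city": "Lisbon"},
        {"customer_id": 2, "email": "bo@example.com", "city": "Oslo"},
    ],
    "billing": [
        {"customer_id": 1, "email": "ana@example.com", "city": "Porto"},
        {"customer_id": 2, "email": "bo@example.com", "city": ""},
    ],
}
print(find_conflicts(sources))  # {1: {'city': {'crm': 'Lisbon', 'billing': 'Porto'}}}
```

Customer 2 is not flagged: the billing system simply lacks a city, which is a gap to fill rather than a contradiction to resolve.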

Exploring the Impact of Generative AI on Data-Related Processes

Generative AI has revolutionized various industries and data-related processes in recent years. It encompasses a wide array of methodologies, ranging from generative adversarial networks (GANs) and variational autoencoders (VAEs) to transformer-based models such as GPT (generative pre-trained transformer). These models can produce lifelike images, text, audio, and even video by generating new data samples that closely resemble human-created content.

Using Generative AI for Enhanced Data Integration

Now let's turn to the practical side: the role generative AI plays in enhancing data integration. Table 1 lists some real-world scenarios that illustrate that role.

Table 1. Real-world use cases

| Industry/Application | Example |
|---|---|
| Healthcare/image recognition | |
| E-commerce | |
| Social media | |
| Cybersecurity | |
| Financial services | |

Ensuring Data Accuracy and Consistency Using AI and ML

Organizations struggle to maintain accurate and reliable data in today's data-driven world. AI and ML help detect anomalies, identify errors, and automate cleaning processes. Let's look at these patterns more closely.

Validation and Data Cleansing

Data validation and cleansing are often laborious tasks, requiring significant time and resources. AI-powered tools streamline and speed up these processes. ML algorithms learn from past data to automatically identify and fix common quality issues. They can standardize formats, fill in missing values, and reconcile inconsistencies. Automating these tasks reduces errors and speeds up data preparation.
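The three cleansing operations named above (standardizing formats, filling in missing values, and reconciling inconsistencies) can be sketched in a few lines of Python. This is a rule-based illustration under stated assumptions, not an ML model: the accepted date formats, the country alias table, and the field names are hypothetical, and the only thing "learned" from the data is the median age used to fill gaps. An ML-based tool would infer such rules from past corrections instead of hard-coding them.

```python
from datetime import datetime
from statistics import median

# Hypothetical rules; a learned model would infer these from past fixes.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")
COUNTRY_ALIASES = {
    "us": "US", "usa": "US", "united states": "US",
    "uk": "GB", "united kingdom": "GB",
}


def to_iso_date(text):
    """Standardize a date string to ISO 8601, or return None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # leave for human review rather than guess


def cleanse(records):
    """Standardize dates, reconcile country names, and fill missing ages."""
    known_ages = [r["age"] for r in records if r.get("age") is not None]
    fill_age = median(known_ages) if known_ages else None
    cleaned = []
    for r in records:
        country = (r.get("country") or "").strip().lower()
        cleaned.append({
            "signup_date": to_iso_date(r.get("signup_date") or ""),
            "country": COUNTRY_ALIASES.get(country, country.upper() or None),
            "age": r["age"] if r.get("age") is not None else fill_age,
        })
    return cleaned


raw = [
    {"signup_date": "2024-03-05", "country": "USA", "age": 34},
    {"signup_date": "05/03/2024", "country": "united kingdom", "age": None},
    {"signup_date": "5.3.2024", "country": " us ", "age": 40},
]
for row in cleanse(raw):
    print(row)
```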

Uncovering Patterns and Insights

AI and ML algorithms can uncover hidden patterns, trends, and correlations within datasets. By analyzing vast amounts of data, these algorithms can identify relationships that may not be apparent to human analysts. AI and ML can also help pinpoint the underlying causes of data quality issues and inform strategies to address them. For example, ML algorithms can identify common errors or patterns contributing to data inconsistencies. Organizations can then implement new processes to improve data collection, enhance data entry guidelines, or identify employee training needs.
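A first step toward finding root causes does not even need a model: counting which source, field, and error type fail together, normalized by how many records each source contributes, often points straight at a broken entry form or feed. The Python sketch below assumes a hypothetical list of validation errors and a hypothetical `error_hotspots` helper; an ML approach would extend the same idea to many more features and subtler correlations.

```python
from collections import Counter


def error_hotspots(errors, records_per_source, n=3):
    """Rank (source, field, error) patterns by failure rate, highest first.

    errors: dicts with "source", "field", and "error" keys.
    records_per_source: {source: number of records validated from it}.
    Returns up to n tuples of (pattern, count, rate).
    """
    counts = Counter((e["source"], e["field"], e["error"]) for e in errors)
    ranked = [
        (pattern, count, count / records_per_source[pattern[0]])
        for pattern, count in counts.items()
    ]
    ranked.sort(key=lambda item: item[2], reverse=True)
    return ranked[:n]


errors = (
    [{"source": "web_form", "field": "phone", "error": "bad_format"}] * 3
    + [{"source": "web_form", "field": "email", "error": "missing"}]
    + [{"source": "call_center", "field": "email", "error": "missing"}]
)
for pattern, count, rate in error_hotspots(errors, {"web_form": 10, "call_center": 2}):
    print(pattern, count, f"{rate:.0%}")
```

Raw counts would put the web form's phone field first; normalizing by volume shows that the call center's missing emails are proportionally worse, which is the kind of finding that leads to better data entry guidelines or targeted training.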

Detecting Anomalies in Data

ML models excel at detecting patterns, including deviations from norms. With ML, organizations can analyze large volumes of data, compare them against established patterns, and flag potential issues. Organizations can then identify anomalies and determine how to correct, update, or augment their data to ensure its integrity.
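The compare-against-established-patterns idea can be illustrated with a plain z-score check in Python. It is a statistical stand-in for the ML models described above, chosen because it is easy to verify by hand; the `flag_anomalies` helper, the sample readings, and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, new_values, z_threshold=3.0):
    """Flag new values that deviate strongly from an established baseline.

    The baseline's mean and standard deviation define "normal"; any new value
    more than z_threshold standard deviations away is returned as
    (index, value, z_score).
    """
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    flagged = []
    for i, x in enumerate(new_values):
        z = (x - mu) / sigma
        if abs(z) > z_threshold:
            flagged.append((i, x, z))
    return flagged


baseline = [100, 102, 98, 101, 99, 100, 103, 97]  # historical readings
print(flag_anomalies(baseline, [101, 95, 130, 100]))  # [(2, 130, 15.0)]
```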

Let's have a look at services that can validate data and detect anomalies.

Detecting Anomalies Using Stream Analytics

Azure Stream Analytics, AWS Kinesis, and Google Cloud Dataflow are examples of stream processing tools that can be used for anomaly detection, so similar solutions can be built on whichever cloud an organization uses. Azure Stream Analytics, for instance, provides built-in ML-based anomaly detection functions that run both in the cloud and at the edge and can monitor both temporary and persistent anomalies.

For example, based on my experience building validation with Azure Stream Analytics, here are several key points to consider:

- The model's accuracy improves with more data in the sliding window, because the model treats the events in the window as the expected range of values for that timeframe. It uses only the event history in the window to spot anomalies and discards old values as the window moves.

- The functions establish a normal baseline from past data and flag outliers that fall outside a given confidence level. Set the window size based on the minimum number of events needed for effective training.

- Response time increases with history size, so include only as many events as you need for better performance.

- You can use the ML-based AnomalyDetection_SpikeAndDip function to monitor temporary anomalies, such as spikes and dips, in a time-series event stream.

- If a second spike within the same sliding window is smaller than the first, its score might not be significant enough compared to the first spike's score at the specified confidence level. To detect such anomalies, try lowering the model's confidence level. However, if you start receiving too many alerts, use a higher confidence level.
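To see why lowering the confidence level can surface a smaller second spike, here is a simplified Python sketch of sliding-window spike-and-dip detection. It is not the algorithm behind `AnomalyDetection_SpikeAndDip`; it swaps in a z-score against the events currently in the window, and the `spike_and_dip` name, its parameters, and the sample readings are hypothetical. It does mirror the behaviors listed above: the window needs a minimum number of events before scoring, old values drop off as it slides, and a confidence level sets the alert threshold.

```python
import math
from collections import deque
from statistics import NormalDist, mean, stdev


def spike_and_dip(values, confidence=0.95, history_size=120, min_events=10):
    """Score each event against the events currently in a sliding window.

    Simplified z-score stand-in, not the Azure Stream Analytics algorithm.
    Returns a list of (value, score, label) where label is "spike", "dip",
    or None.
    """
    # Two-sided cutoff: 0.95 -> about 1.96 standard deviations.
    threshold = NormalDist().inv_cdf((1 + confidence) / 2)
    window = deque(maxlen=history_size)  # oldest events fall off as it slides
    results = []
    for x in values:
        score, label = 0.0, None
        if len(window) >= min_events:  # not enough history yet: no verdict
            mu, sigma = mean(window), stdev(window)
            if sigma > 0:
                score = (x - mu) / sigma
            elif x != mu:
                score = math.copysign(math.inf, x - mu)
            if score > threshold:
                label = "spike"
            elif score < -threshold:
                label = "dip"
        results.append((x, score, label))
        window.append(x)  # the event joins the baseline for later events
    return results


readings = [10, 11, 10, 12, 11, 10, 11, 12, 10, 11, 30, 11, 10, 20, 11]
for conf in (0.95, 0.80):
    flagged = [x for x, _, label in spike_and_dip(readings, confidence=conf) if label]
    print(conf, flagged)
```

At 95% confidence, the first spike (30) inflates the window's spread, so the second, smaller spike (20) scores about 1.44 and stays below the 1.96 cutoff; at 80% confidence the cutoff drops to about 1.28 and both spikes are flagged. The trade-off runs the other way too: a lower confidence level also produces more alerts on noisy data.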

Leveraging Generative AI for Data Transformation and Augmentation

Generative AI also helps with data augmentation and transformation, which complement the data validation process. Generative models can produce synthetic data that resembles actual data samples, which is particularly useful when the available dataset is small or lacks diversity. Generative models can also be trained to translate data from one domain to another, or to transform data while preserving its underlying characteristics.
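As a toy illustration of synthetic data generation, the Python sketch below fits the simplest possible generative model, one independent normal distribution per numeric column, and samples new rows from it. Real generative models such as GANs or VAEs also capture correlations between columns, which this sketch deliberately ignores; the column names, values, and helper functions are hypothetical.

```python
import random
from statistics import mean, stdev


def fit_gaussian_columns(rows):
    """Learn (mean, standard deviation) for every numeric column."""
    model = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        model[column] = (mean(values), stdev(values))
    return model


def sample_synthetic(model, n, seed=None):
    """Draw n synthetic rows that follow each column's fitted distribution."""
    rng = random.Random(seed)
    return [
        {column: rng.gauss(mu, sigma) for column, (mu, sigma) in model.items()}
        for _ in range(n)
    ]


real = [
    {"order_total": 42.0, "items": 3},
    {"order_total": 18.5, "items": 1},
    {"order_total": 63.0, "items": 5},
    {"order_total": 27.5, "items": 2},
]
model = fit_gaussian_columns(real)
for row in sample_synthetic(model, 3, seed=7):
    print({k: round(v, 2) for k, v in row.items()})
```

Sampled `items` values come out fractional and can even be negative; a realistic generator would round, clip, or model discrete columns differently, which is part of why purpose-built generative models are used for this task.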

For example, sequence-to-sequence models like transformers can be used in NLP for tasks such as language translation or text summarization, effectively transforming the input data into a different representation. The same kind of transformation can also help solve problems in legacy systems built on an old codebase, where organizations can unlock numerous benefits by transitioning to modern programming languages. For instance, many legacy systems are built on outdated programming languages such as Cobol, Lisp, and Fortran. To modernize these systems and improve their performance and maintainability, organizations often migrate or rewrite them in modern languages such as Python, C#, or Go.

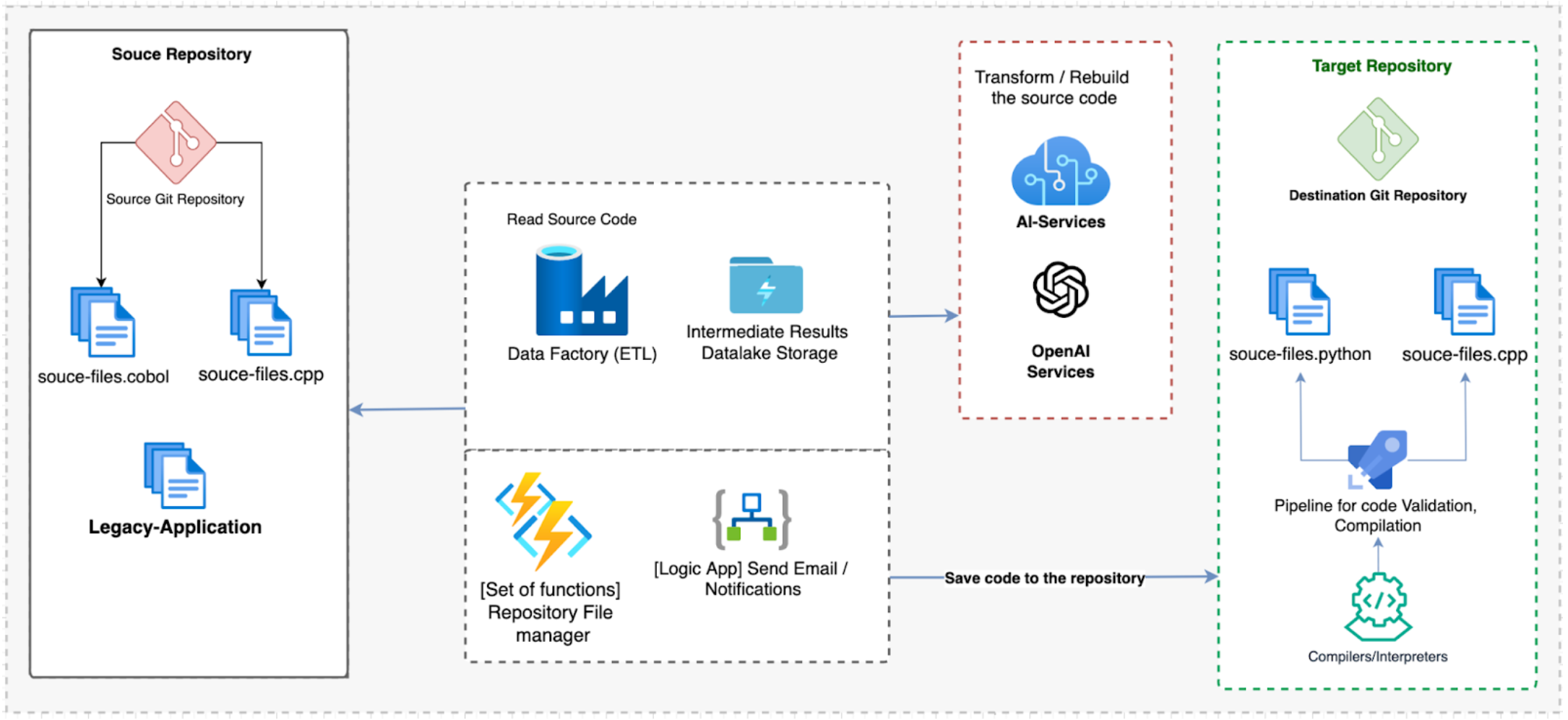

Let's look at the diagram below to see how generative AI can be used to facilitate this migration process:

Figure 1. Using generative AI to rewrite legacy code

The architecture above is based on the following components and workflow:

- Azure Data Factory is the main ETL (extract, transform, load) service for data orchestration and transformation. It connects to the source Git repositories. Alternatively, you can use AWS Glue for data integration or Google Cloud Data Fusion for ETL operations.

- OpenAI is the generative AI service used to transform Cobol and C++ code into Python, C#, and Go (or any other language). The OpenAI service is connected to Data Factory. Alternatives to OpenAI include Amazon SageMaker and Google Cloud AI Platform (now Vertex AI).

- Azure Logic Apps and Google Cloud Functions are utility services that provide data mapping and file management capabilities.

- DevOps CI/CD provides pipelines to validate, compile, and interpret generated code.
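The generate-then-validate loop in Figure 1 can be sketched in Python. The sketch is hypothetical in several ways: `complete` stands in for whatever call your pipeline makes to the generative AI service (for example, an OpenAI deployment invoked from Data Factory), the prompt wording is illustrative, and the validation step only checks that generated Python parses, roughly what the first gate of a CI/CD pipeline would do. C# or Go targets would be validated by their own compilers instead.

```python
import re
from typing import Callable, Optional, Tuple

# Captures the body of the first fenced block in a model reply.
FENCED = re.compile(r"`{3}[\w+-]*\n(.*?)`{3}", re.DOTALL)


def build_prompt(source: str, source_lang: str, target_lang: str) -> str:
    """Ask the model for a behavior-preserving rewrite (illustrative wording)."""
    return (
        f"Rewrite the following {source_lang} program in idiomatic {target_lang}. "
        "Preserve its behavior and return only the code in one fenced block.\n\n"
        + source
    )


def extract_code(reply: str) -> str:
    """Keep only the code, whether or not the model wrapped it in a fence."""
    match = FENCED.search(reply)
    return (match.group(1) if match else reply).strip() + "\n"


def translate_to_python(
    source: str, source_lang: str, complete: Callable[[str], str]
) -> Tuple[str, Optional[str]]:
    """Translate one legacy file and syntax-check the result.

    Returns (generated_code, error); error is None when the code parses.
    Parsing is not proof of correctness: behavior still needs tests.
    """
    code = extract_code(complete(build_prompt(source, source_lang, "Python")))
    try:
        compile(code, "<generated>", "exec")  # syntax check only; nothing runs
    except SyntaxError as err:
        return code, f"line {err.lineno}: {err.msg}"
    return code, None
```

Passing the model call in as a function keeps the loop testable without network access; in a real pipeline, files that fail the check would be routed back for regeneration or human review.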

Data Validation and AI: Chatbot Call Center Use Case

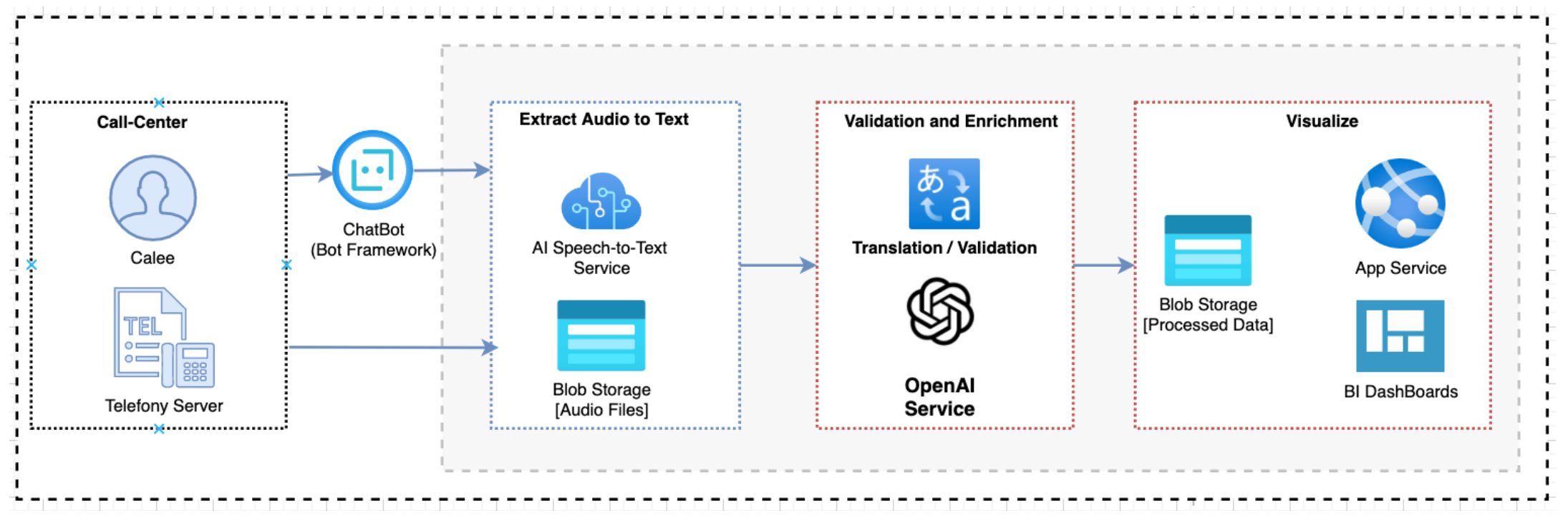

An automated call center setup is a great use case to demonstrate data validation. The following example provides an automation and database solution for call centers:

Figure 2. Call center chatbot architecture

The automation and database solution extracts data from the speech bot deployed in call centers or from interactions with real people. It then stores, analyzes, and validates this data using OpenAI's ChatGPT and an AI sentiment analysis service. The analyzed data is then visualized in business intelligence (BI) dashboards for comprehensive insights. The processed information is also integrated into customer relationship management (CRM) systems for human validation and further action.

The solution ensures accurate understanding and interpretation of customer interactions by leveraging ChatGPT, an advanced NLP model. Using BI dashboards offers intuitive and interactive data visualization capabilities, allowing stakeholders to gain actionable insights at a glance. Integrating the analyzed data into CRM systems enables seamless collaboration between automated analysis and human validation.
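One way to picture the validation step is a check on each structured analysis the language model returns before it reaches the BI dashboards or the CRM. The Python sketch below assumes, hypothetically, that the model is prompted to reply with JSON containing `sentiment`, `confidence`, and `summary` fields; the field names, the 0.7 confidence floor, and the rule that negative calls always go to a person are illustrative choices, not part of the architecture in Figure 2.

```python
import json

ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


def validate_analysis(raw_reply, min_confidence=0.7):
    """Validate one model-generated call analysis.

    Returns (record, needs_review, reasons). Anything malformed, uncertain,
    or negative is flagged so the CRM can queue it for a person.
    """
    try:
        record = json.loads(raw_reply)
    except json.JSONDecodeError:
        return None, True, ["reply is not valid JSON"]
    if not isinstance(record, dict):
        return None, True, ["reply is not a JSON object"]

    reasons = []
    sentiment = record.get("sentiment")
    confidence = record.get("confidence")
    summary = record.get("summary")
    if not isinstance(sentiment, str) or sentiment not in ALLOWED_SENTIMENTS:
        reasons.append(f"unknown sentiment: {sentiment!r}")
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        reasons.append("confidence is missing or not a number")
    elif not 0 <= confidence <= 1:
        reasons.append("confidence is outside 0..1")
    elif confidence < min_confidence:
        reasons.append(f"low confidence ({confidence:.2f})")
    if not isinstance(summary, str) or not summary.strip():
        reasons.append("empty summary")
    if sentiment == "negative":
        reasons.append("negative sentiment: route to an agent")
    return record, bool(reasons), reasons


print(validate_analysis(
    '{"sentiment": "negative", "confidence": 0.93, "summary": "Wants to cancel."}'
))
```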

Conclusion

In the ever-evolving landscape of enterprise AI, achieving data excellence is crucial. Data and generative AI services that provide data analysis, ETL, and NLP enable robust integration strategies for unlocking the full potential of data assets. By combining data-driven approaches and advanced technologies, businesses can pave the way for enhanced decision-making, productivity, and innovation through these AI and data services.

This is an excerpt from DZone's 2024 Trend Report, Enterprise AI: The Emerging Landscape of Knowledge Engineering.
