CRM Analytics Data Flow and Recipe, Ultimate Guide to Data Transformation

Cloud-based Business Intelligence (BI) and visualization framework known for Salesforce data integration with external sources and predictive analytics.

Join the DZone community and get the full member experience.

Join For FreeSalesforce CRM Analytics is a cloud-based Business Intelligence (BI) and visualization framework seamlessly integrated into the Salesforce platform, designed to enable business insights, predictive analytics, and recommendations by integrating the Salesforce data with external big data sources.

CRM Dataflows and recipes are effective Data Transformation tools providing extensive ETL (Extract, Transform, and Load) capabilities. Dataflows can be used to Extract Data from Salesforce Local objects or External Big Data sources, Transform the Input data by performing data operations like Filter, Modify, Group, Cleanse, Append, Slice, and Transform, and Load the transformed Data into the datasets and make it available for deriving the Business Insights in the CRM Analytics Dashboards and Lens.

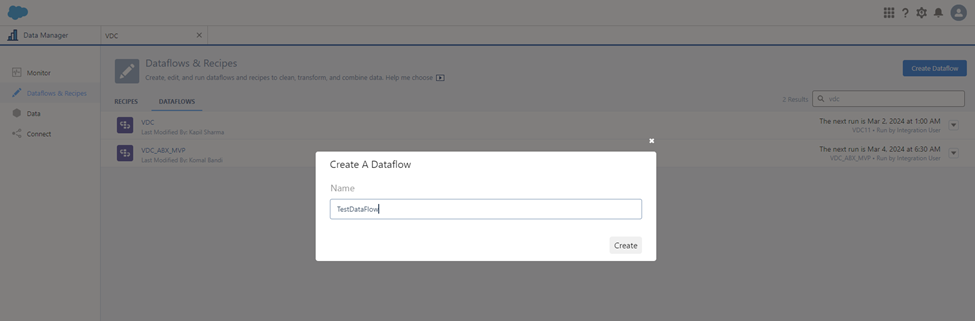

How To Create a Dataflow

CRM Analytics provides a quick and easy navigation for creating a new Dataflow.

- Login to Salesforce Data Manager.

- Click on Dataflows and Recipes > Create Dataflow

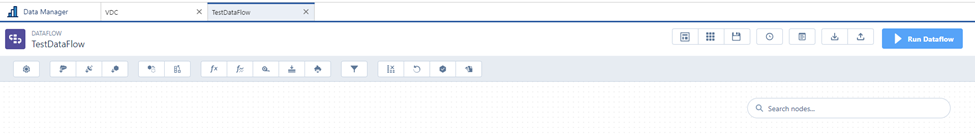

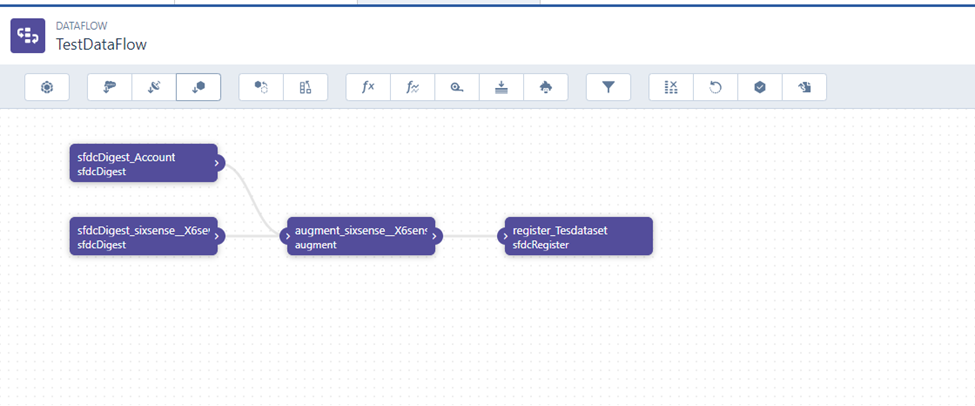

This will take you to Dataflow builder and now you ready for your Data transformation journey.

This will take you to Dataflow builder and now you ready for your Data transformation journey.

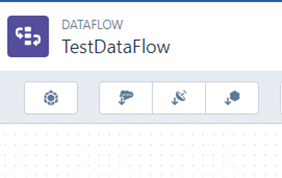

Extract/Data Ingestion

There are four Data Extract nodes available in CRM Analytics, DatasetBuiler, sfdcDigest, digest and edgemart.

DatasetBuiler

DatasetBuilder lets create a new dataset by joining multiple local Saleforce objects. Following are quick steps to create a dataset.

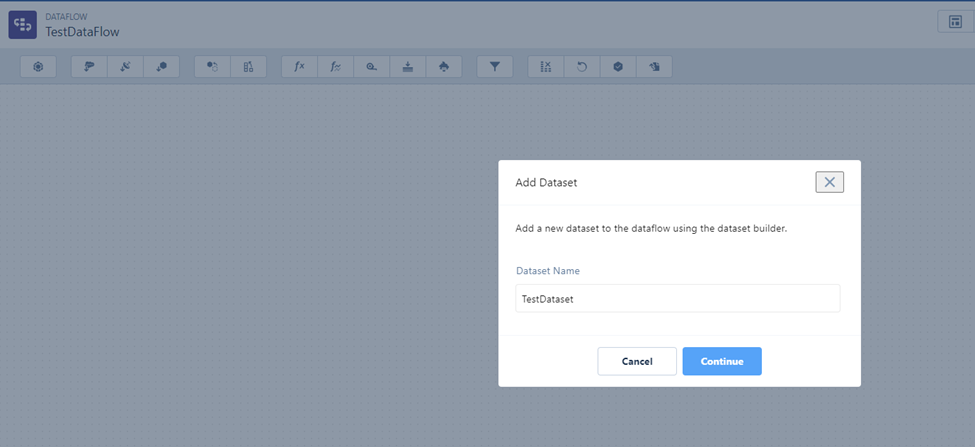

- Click on DatasetBuilder, specify the name of Dataset, and click on Continue.

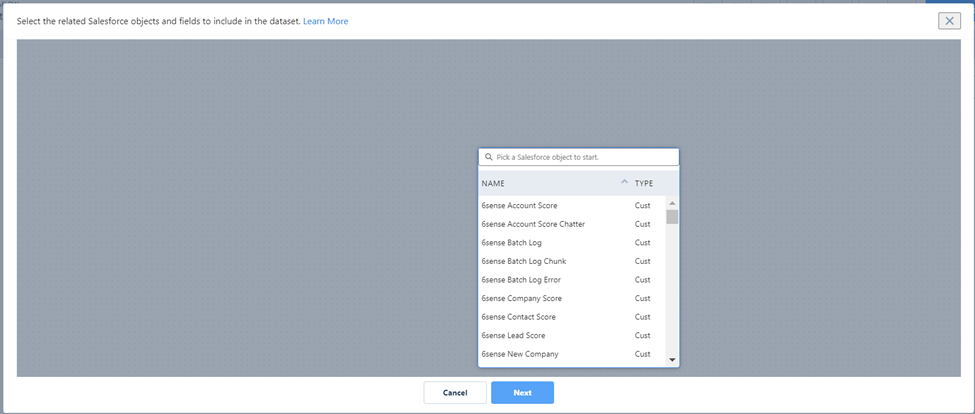

- Select the first Local Salesforce Object to be added as a Dataset in the dataflow.

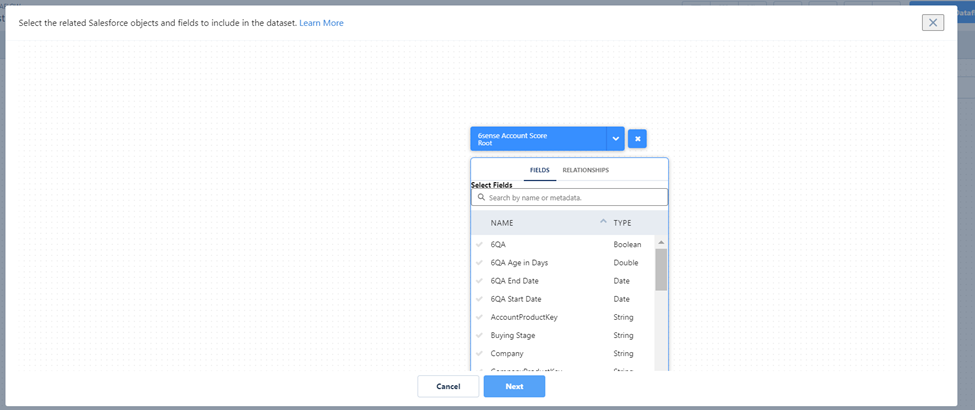

- Select the fields from the object identified from above.

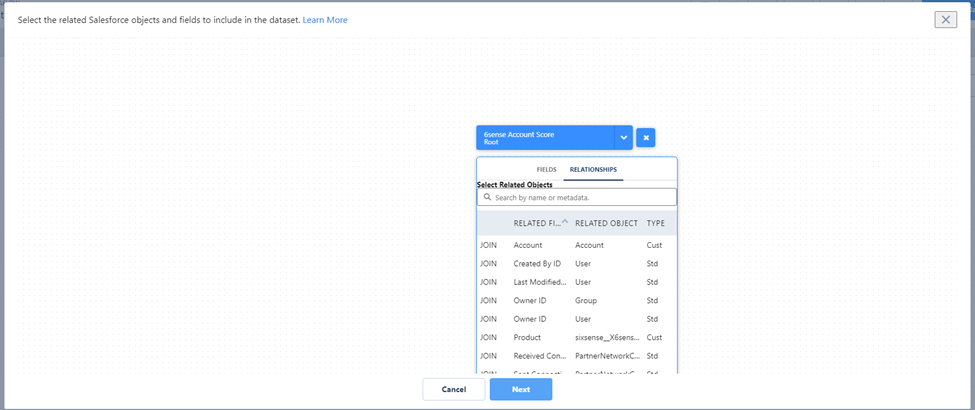

- Click on Relationships to create multi object dataset by joining two objects.

- This will create multiple nodes in the dataflow to mimic the flow of input data from two SFDC local objects, joining them together based on the relationship columns and exporting the data into another dataset

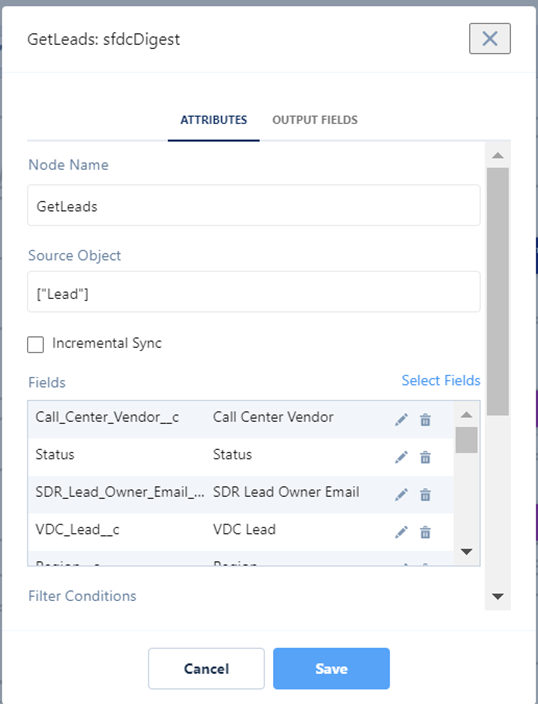

sfdcDigest

sfdcDigest can be used to extract data from a Salesforce Data Sync. This node lets you narrow down the data by specifying the fields to be selected and filtering conditions. This node also allows you to choose the data sync type, Full Sync, or Incremental Sync. Filter criteria can be specified in the SAQL statement.

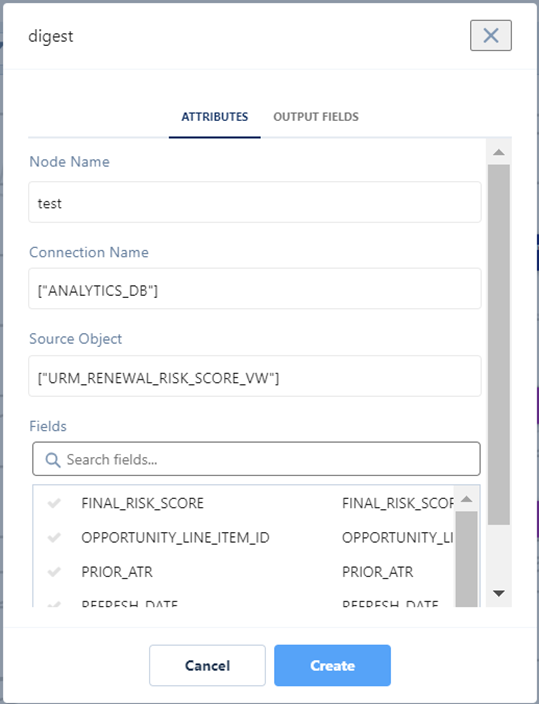

Digest

The digest transformation extracts synced connected data in a dataflow. Use it to extract data synced from an external Salesforce org or data synced through an external connection. Use the sfdcDigest transformation to extract from your local Salesforce org.

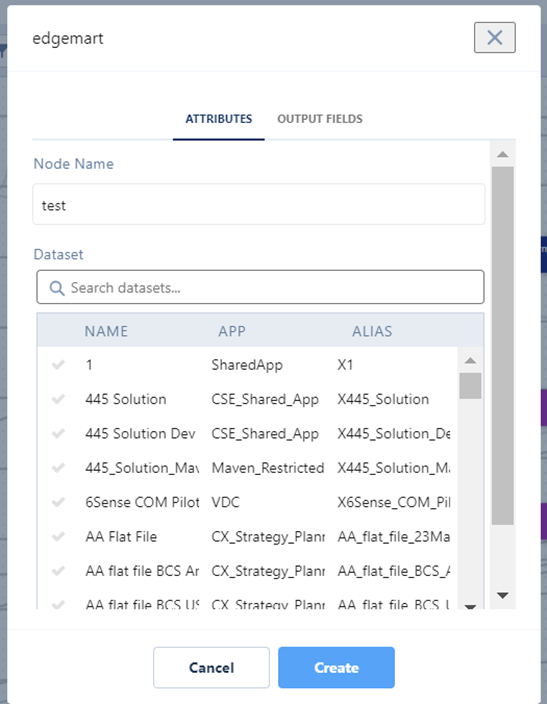

Edgemart

The edgemart Transformation gives the dataflow access to an existing, registered dataset, which can contain Salesforce data, external data, or a combination of the two. Use this transformation to reference a dataset so that its data can be used in subsequent transformations in the data flow. You can use this transformation and the augment transformation together to join an existing dataset with a new dataset.

Data Integration

CRM Analytics allows to integrate the multiple stream of data objects by either appending multiple inputs with each other using append transformation or let the developers enhance the input data stream by joining with other input based on certain key columns using augment transformation.

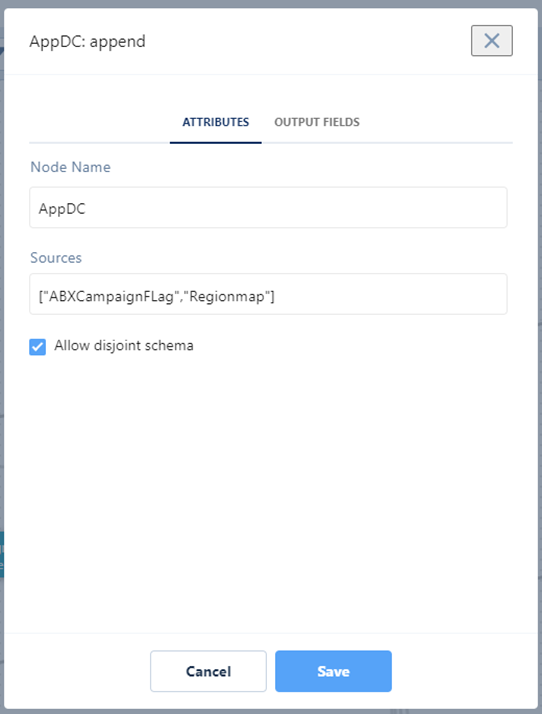

Append

Append transformation helps stacking rows from multiple sets of input into each other. For example, of you can append rows from multiple REGION into one stream to create a global dataset. Append also allows to work on disjointed datasets, not having same columns in these input streams.

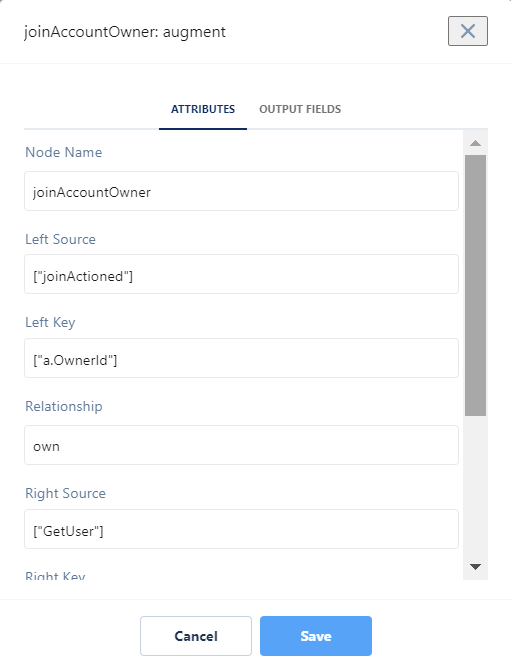

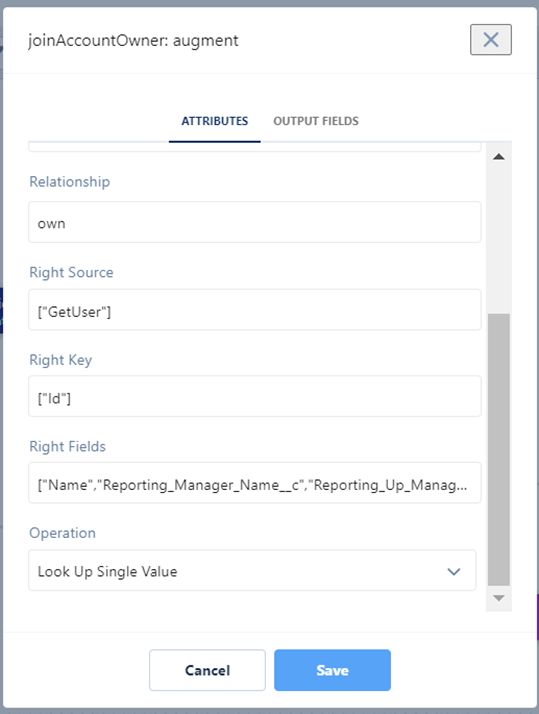

Augment

Augment transformation joins input data objects and add the columns from Right Input data objects into Left Data Object based on the joner columns.Every record in left input is looked up in the right input based the key columns and columns for the first matching record from right input is added to the left input.

Augment transformation also allows the creation of multi valued attributes, if left input column values match with multiple records from right, all the matching values added to the multi valued attribute, this is achieved using the “Look up Multiple Values” in the “Operation” parameter.

Following configuration helps joining the Account object with the user object to add the account owner details like, owner name, email and manager.

Data Transformation

CRM Analytics allows to update the input streams using multiple transformations like computeExpression, computeRelative, dim2mea, flatten and prediction.

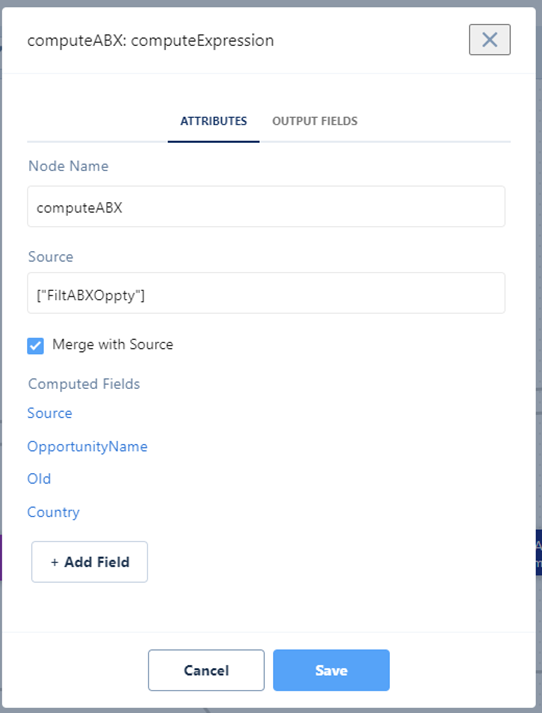

computeExpression

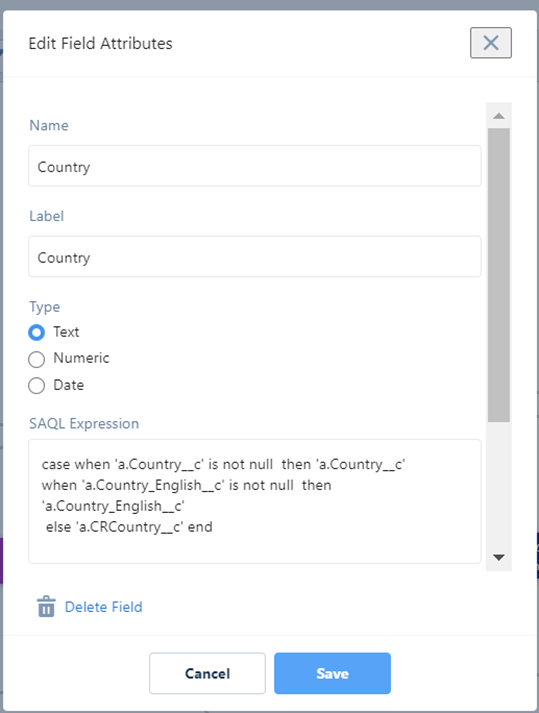

The computeExpression transformation allows to add calculated or derived fields to a dataset. Values for these calculated fields are generated using a SAQL expression, which can be based on one or more fields from the input data or other derived fields. For example, you can use an expression to convert the opportunity value from one currency into another, or you can change the date format of a date or parse input text value.

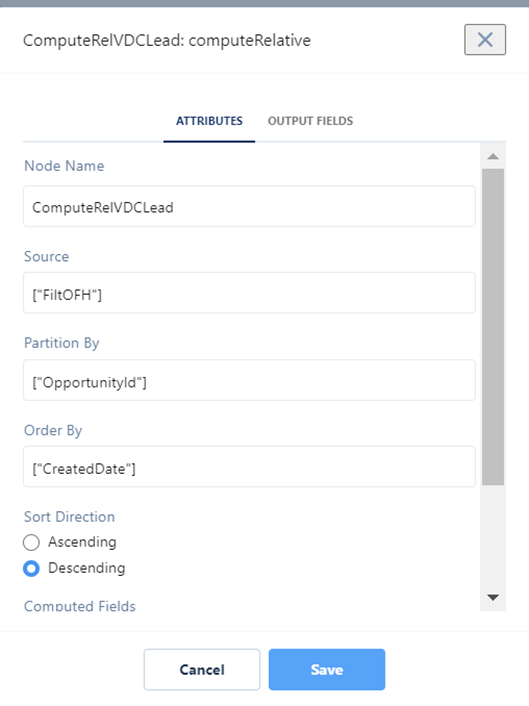

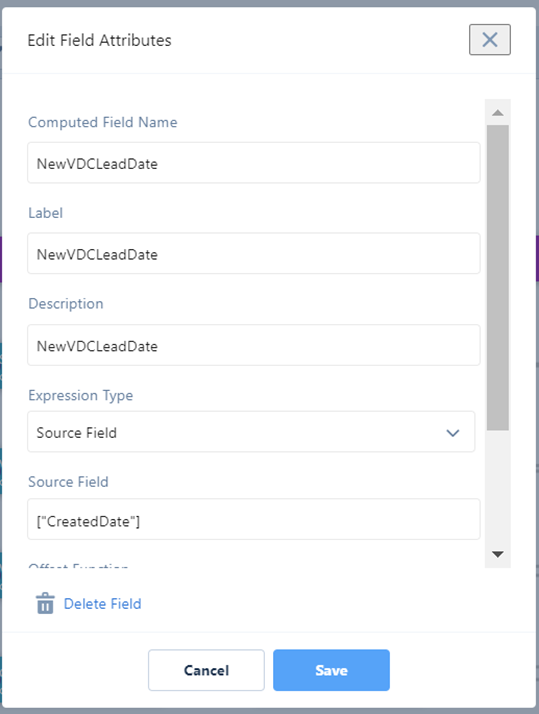

computeRelative

computeRelative transformation can be used to analyze trends in ata by adding calculated fields to a dataset based on values in other rows. For example, to analyze sales pipeline trends, create derived fields that calculate the number of days an opportunity remains in each stage. You can also calculate the changes to the opportunity amount throughout the stages of the opportunity.

dim2mea

The dim2mea Transformation creates a new measure based on a dimension. The transformation adds the new measure column to the dataset. The transformation also preserves the dimension to ensure that lenses and dashboards don’t break if they use the dimension.

During the column type conversion, the Dimension to Measure transformation rounds decimals to the nearest whole number. For example, 300.2939 becomes 300.

Filter

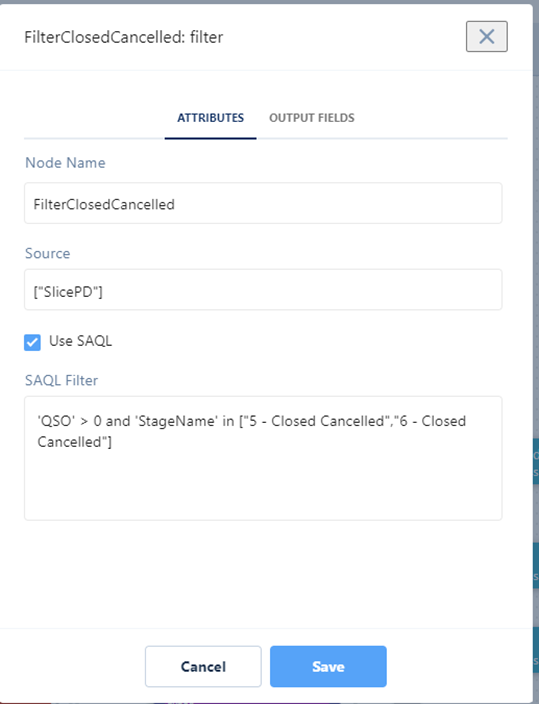

Filter transformation allows to narrow down the input stream to the select few records, you can specify the SAQL query to keep specific records.

For example, below configuration selects all the opportunities which have been closed cancelled.

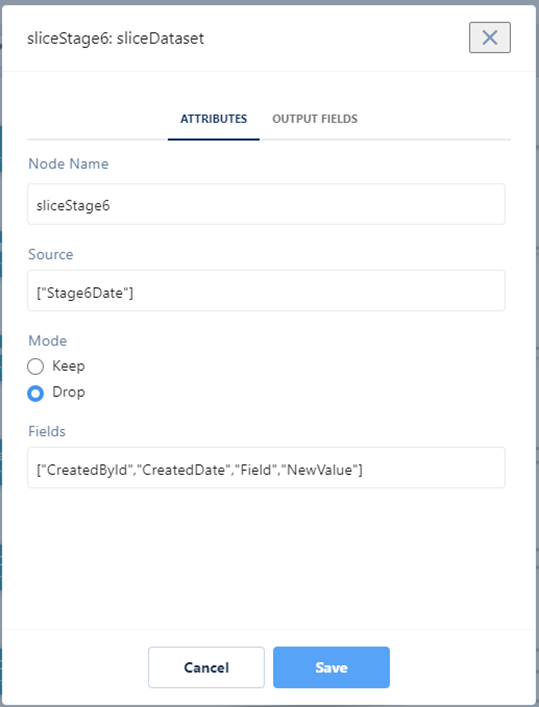

sliceDataset

sliceDataset transformation allows to select or drop the specific columns to narrow down the input stream, you can specify the columns which needs to be selected or dropped from the input stream.

For example, below configuration drops few columns from the input stream.

Data Load

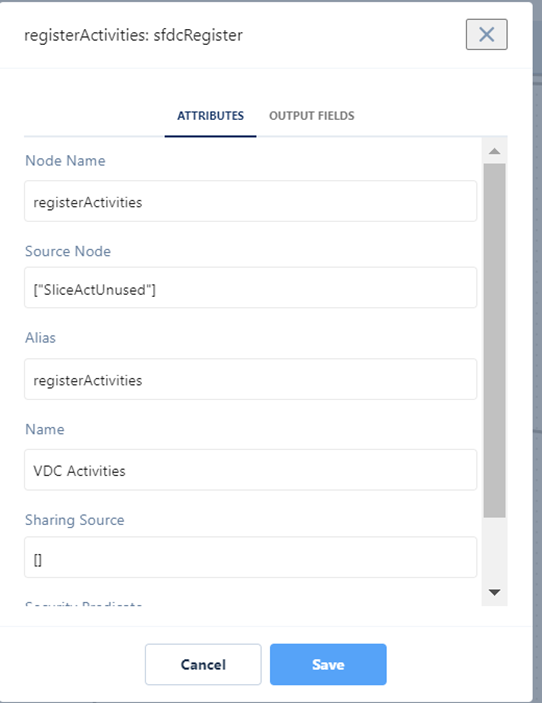

CRM Analytics allows to create and refresh the datasets using sfdcRegister and export transformations. These datasets can be used to query and design dashboards for further analysis.

sfdcRegister

The sfdcRegister transformation helps create or refresh a dataset with all the derived and ingested columns, each time a data is executed the sfdcRegister would overwrite the current dataset.

Conclusion

In the end, CRM analytics provides an exhaustive list of ETL(Extract Transform and Load) capabilities. These features comes really handy creating datasets for Business Insights specially in the field of Sales and Marketing. The seamless integration between Salesforce and external big data applications like AWS Redshift, Microsoft Azure, Google Analytics, SAP HANA, Snowflake, Microsoft Dynamics, Mulesoft, and Oracle makes this tool a market leader in the sector. With a thriving developer community and extensive ecosystem, CRM Analytics remains at the forefront, enabling innovative business insights in these dynamic domains.

Opinions expressed by DZone contributors are their own.

Comments