Engineering Trustworthy AI: A Deep Dive Into Security and Safety for Software Developers

Building truly intelligent systems isn't enough; we must prioritize security and safety to ensure these innovations benefit humanity without causing harm.


The AI revolution has moved beyond theoretical discussion and into the hands of software developers like us. We're entrusted with building the AI-powered systems that will shape the future, and with that power comes a significant responsibility: ensuring these systems are secure, reliable, and worthy of trust. Let's delve into the technical strategies and best practices for engineering trustworthy AI, going beyond buzzwords to explore practical solutions.

Fortifying the AI Fortress: Technical Strategies for Security

Data is the lifeblood of AI, but it's also a prime target for malicious actors. Compromised data can lead to biased models, privacy violations, and even catastrophic failures, and data is only one target: attackers can also go after the trained model itself and the inputs it receives in production. We need defenses against several classes of threats, including:

Adversarial Attacks

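These attacks feed a model carefully crafted inputs, often differing from legitimate ones by perturbations too small for a human to notice, that cause it to produce incorrect outputs. To mitigate them, consider techniques like: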

- Adversarial training: Incorporate adversarial examples into the training process to improve model resilience. Generate them with methods like the Fast Gradient Sign Method (FGSM), a fast single-step attack, or with stronger iterative attacks such as Projected Gradient Descent (PGD) and the Carlini & Wagner (C&W) attack.

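- Defensive distillation: Train a second model on the softened class probabilities that the first model produces at a high softmax temperature. This smooths the decision boundaries and reduces susceptibility to small adversarial perturbations, although Carlini & Wagner showed that their attack can defeat it, so it should not be the only defense.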

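- Input preprocessing and feature squeezing: Transform inputs before they reach the model, for example with image compression, reduced color bit depth, spatial smoothing, or dimensionality reduction, to weaken adversarial perturbations. Comparing the model's prediction on the original input with its prediction on the squeezed input can also flag likely adversarial examples.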
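To make adversarial training with FGSM concrete, here is a minimal sketch on a model small enough to differentiate by hand. It assumes a plain logistic-regression classifier (chosen so the gradient of the loss with respect to the input has a closed form), and the helper names such as `fgsm` and `adversarial_train` are hypothetical; in a deep-learning framework you would get the input gradient from autograd instead.

```python
# Minimal sketch: FGSM and adversarial training for logistic regression.
# Model choice, function names, and hyperparameters are illustrative assumptions.
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def logit(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear score z = w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability of class 1."""
    return 1.0 / (1.0 + math.exp(-logit(w, b, x)))


def bce_loss(w: Sequence[float], b: float, x: Sequence[float], y: int) -> float:
    """Binary cross-entropy in logit form: log(1 + exp(-s * z)), s = +1/-1."""
    s = 1.0 if y == 1 else -1.0
    return math.log1p(math.exp(-s * logit(w, b, x)))


def input_gradient(w: Sequence[float], b: float, x: Sequence[float], y: int) -> Vector:
    """Gradient of the loss with respect to the input x: (p - y) * w."""
    err = predict(w, b, x) - y
    return [err * wi for wi in w]


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, epsilon: float) -> Vector:
    """Fast Gradient Sign Method: shift each feature by epsilon in the
    direction that increases the loss (an L-infinity bounded step)."""
    grad = input_gradient(w, b, x, y)
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def sgd_step(w: Sequence[float], b: float, x: Sequence[float], y: int,
             lr: float) -> Tuple[Vector, float]:
    """One stochastic gradient step on the weights for a single example."""
    err = predict(w, b, x) - y  # d(loss)/d(z)
    return [wi - lr * err * xi for wi, xi in zip(w, x)], b - lr * err


def adversarial_train(data, epochs: int = 50, lr: float = 0.1, epsilon: float = 0.1):
    """Train on every clean example and on its FGSM counterpart,
    crafted against the current weights."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, epsilon)
            w, b = sgd_step(w, b, x, y, lr)
            w, b = sgd_step(w, b, x_adv, y, lr)
    return w, b
```

PGD follows the same pattern but takes several smaller sign steps, projecting back into the epsilon-ball around the original input after each one.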

Data Poisoning

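In a data poisoning attack, an adversary injects or alters training samples to degrade the model or plant a hidden backdoor, so protecting the integrity of training data is crucial.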

- Data provenance and versioning: Track the origin of your data and every modification made to it throughout its lifecycle. Tools like Git LFS or DVC can version datasets alongside your code.

- Anomaly detection: Screen incoming data for outliers and flag potentially poisoned points for review before they reach training. Isolation forests and autoencoders are common choices for this.
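The isolation-forest idea can be shown in a short, dependency-free sketch: random axis-aligned splits isolate unusual points in few steps, so those points get anomaly scores close to 1. The class name `IsolationForest`, its parameters, and the toy data are assumptions for illustration; in practice you would more likely use a maintained implementation such as scikit-learn's `IsolationForest`.

```python
# Minimal isolation-forest sketch; names, defaults, and data are illustrative assumptions.
import math
import random
from typing import List, Optional, Sequence

Point = Sequence[float]


def average_path_length(n: int) -> float:
    """c(n): expected path length of an unsuccessful BST search over n points,
    used to normalize isolation depths."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + 0.5772156649  # approximates H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


class _Node:
    def __init__(self, size: int, feature: Optional[int] = None, split: float = 0.0,
                 left: Optional["_Node"] = None, right: Optional["_Node"] = None):
        self.size, self.feature, self.split = size, feature, split
        self.left, self.right = left, right


def _build(points: List[Point], depth: int, max_depth: int, rng: random.Random) -> _Node:
    if depth >= max_depth or len(points) <= 1:
        return _Node(len(points))
    feature = rng.randrange(len(points[0]))
    values = [p[feature] for p in points]
    lo, hi = min(values), max(values)
    if lo == hi:  # a constant feature cannot be split; stop here
        return _Node(len(points))
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[feature] < split]
    right = [p for p in points if p[feature] >= split]
    return _Node(len(points), feature, split,
                 _build(left, depth + 1, max_depth, rng),
                 _build(right, depth + 1, max_depth, rng))


def _path_length(x: Point, node: _Node, depth: int = 0) -> float:
    if node.feature is None:
        # Points still grouped in a leaf add their expected remaining depth.
        return depth + average_path_length(node.size)
    child = node.left if x[node.feature] < node.split else node.right
    return _path_length(x, child, depth + 1)


class IsolationForest:
    def __init__(self, n_trees: int = 100, sample_size: int = 256, seed: int = 0):
        self.n_trees, self.sample_size = n_trees, sample_size
        self.rng = random.Random(seed)
        self.trees: List[_Node] = []

    def fit(self, data: List[Point]) -> "IsolationForest":
        if len(data) < 2:
            raise ValueError("need at least two points")
        self.psi = min(self.sample_size, len(data))
        max_depth = math.ceil(math.log2(self.psi))
        self.trees = [_build(self.rng.sample(data, self.psi), 0, max_depth, self.rng)
                      for _ in range(self.n_trees)]
        return self

    def score(self, x: Point) -> float:
        """Anomaly score in (0, 1]; values near 1 suggest an outlier."""
        mean_depth = sum(_path_length(x, t) for t in self.trees) / len(self.trees)
        return 2.0 ** (-mean_depth / average_path_length(self.psi))
```

Points whose score exceeds a chosen threshold can then be quarantined for manual review rather than silently dropped, which keeps the provenance trail intact.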

Model Theft

Protect your intellectual property with:

- Model obfuscation: Implement techniques like code obfuscation or model encryption to make it difficult to reverse engineer the model architecture and parameters.

- Access control and authentication: Utilize robust access control mechanisms like role-based access control (RBAC) and strong authentication methods like multi-factor authentication (MFA) to prevent unauthorized access.

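- Homomorphic encryption: Explore homomorphic encryption, which allows computations on encrypted data without decrypting it. This makes it possible to run inference on encrypted inputs, or with encrypted model parameters, without exposing them in plaintext, at the cost of substantial computational overhead.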
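The core idea behind homomorphic encryption fits in a few lines using the Paillier cryptosystem, an additively homomorphic scheme swapped in here for illustration. In this minimal sketch (toy key size, not secure; all function names are hypothetical), a client encrypts its feature vector, a server computes a linear model's score on the ciphertexts using its own plaintext integer weights, and only the client can decrypt the result. In this arrangement the client's inputs stay encrypted and the weights never leave the server.

```python
# Toy Paillier sketch for encrypted linear inference. NOT secure: illustrative only.
import math
import random
from typing import Sequence, Tuple

# The 1,000th and 10,000th primes; real keys use primes of 1024 bits or more.
P, Q = 7919, 104729


def keygen(p: int = P, q: int = Q) -> Tuple[int, Tuple[int, int]]:
    """Return the public modulus n and the private key (lambda, mu)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int, rng: random.Random) -> int:
    """Encrypt a (possibly negative) integer m as (n + 1)^m * r^n mod n^2."""
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return pow(n + 1, m % n, n2) * pow(r, n, n2) % n2


def decrypt(n: int, key: Tuple[int, int], c: int) -> int:
    lam, mu = key
    u = pow(c, lam, n * n)
    m = (u - 1) // n * mu % n
    return m - n if m > n // 2 else m  # map back to a signed integer


def encrypted_dot(n: int, enc_x: Sequence[int], weights: Sequence[int]) -> int:
    """Enc(sum of w_i * x_i) from Enc(x_i) and plaintext integer weights:
    multiplying ciphertexts adds plaintexts, exponentiation scales them."""
    n2 = n * n
    acc = 1  # a (non-randomized) encryption of 0
    for c, w in zip(enc_x, weights):
        acc = acc * pow(c, w % n, n2) % n2
    return acc
```

Paillier supports only addition and multiplication by plaintext constants, so non-linear models require fully homomorphic schemes or hybrid protocols.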

Privacy Breaches

Safeguard sensitive data with:

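- Differential privacy: Add calibrated noise, for example with the Laplace or Gaussian mechanism, so that results reveal little about any single individual while preserving aggregate utility. For model training, DP-SGD applies the same idea by clipping per-example gradients and adding Gaussian noise.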

- Federated learning: Explore federated learning frameworks like TensorFlow Federated or PySyft to train models on decentralized data without sharing raw data.

- Secure multi-party computation (MPC): Consider MPC protocols to enable collaborative training on sensitive data without revealing individual data points.
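As an illustration of the Laplace mechanism, the sketch below releases a differentially private count. It assumes a counting query, whose sensitivity is 1 because adding or removing one person changes the count by at most 1; the helper names are hypothetical. Training-time privacy typically relies on DP-SGD instead, which this sketch does not cover.

```python
# Minimal Laplace-mechanism sketch; function names are illustrative assumptions.
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d.
    exponential variables with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def laplace_mechanism(true_value: float, sensitivity: float,
                      epsilon: float, rng: random.Random) -> float:
    """Release true_value with epsilon-differential privacy by adding
    Laplace noise with scale = sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_value + laplace_noise(sensitivity / epsilon, rng)


def private_count(records: Iterable[T], predicate: Callable[[T], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Noisy count of the records matching predicate (sensitivity 1)."""
    true_count = sum(1 for r in records if predicate(r))
    return laplace_mechanism(true_count, sensitivity=1.0, epsilon=epsilon, rng=rng)
```

Smaller `epsilon` means stronger privacy and more noise: with `epsilon=0.5` the noise scale is 2, so the released count is off by about 2 on average.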

Building Safety Nets: Mitigating Unintended Consequences

Building truly safe AI involves addressing potential biases, ensuring transparency, and mitigating unintended consequences. AI systems, especially those based on deep learning models, can exhibit complex and sometimes unpredictable behaviors. The following approaches help mitigate these risks:

Bias Mitigation

- Pre-processing techniques: Analyze and address biases in training data through techniques like re-weighting, sampling, or data augmentation.

- In-processing techniques: Explore algorithms like adversarial debiasing or prejudice remover regularizers to mitigate bias during model training.

- Post-processing techniques: Adjust model predictions to ensure fairness, using techniques like reject option classification or calibrated equalized odds.
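Re-weighting, the first pre-processing technique above, can be sketched in a few lines. This minimal example follows the reweighing scheme of Kamiran and Calders: each (group, label) pair gets the weight P(group) * P(label) / P(group, label), which makes group membership and label statistically independent in the weighted data. The function names and toy data are hypothetical.

```python
# Minimal reweighing sketch; function names and data are illustrative assumptions.
from collections import Counter
from typing import Hashable, List, Sequence


def reweighing_weights(groups: Sequence[Hashable], labels: Sequence[int]) -> List[float]:
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def positive_rate(groups, labels, weights, group) -> float:
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positives = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positives / total


def parity_difference(groups, labels, weights, a, b) -> float:
    """Difference in weighted positive rates between groups a and b."""
    return positive_rate(groups, labels, weights, a) - positive_rate(groups, labels, weights, b)
```

Many training APIs accept per-example weights directly (for example, a `sample_weight` argument), so the model can learn from the reweighted data without any record being altered.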

Explainable AI (XAI)

- Local Interpretable Model-Agnostic Explanations (LIME): Explain individual predictions by approximating the model locally with an interpretable model.

- SHapley Additive exPlanations (SHAP): Assign each input feature a contribution to an individual prediction, based on Shapley values from cooperative game theory.

- Integrated gradients: Attribute the prediction to input features by accumulating gradients along a path from a baseline input to the actual input.
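The Shapley values behind SHAP can be computed exactly when there are only a few features, by averaging each feature's marginal contribution over every coalition of the other features. This minimal sketch assumes a simplified value function in which features outside a coalition take their values from a single baseline input; the SHAP library estimates these values far more efficiently and usually works with a background dataset instead. `shapley_values` and the toy model are hypothetical, and the cost grows as 2^n, so this is only practical for a handful of features.

```python
# Exact Shapley values by coalition enumeration; names and the single-baseline
# value function are illustrative assumptions.
import itertools
import math
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Shapley value of each feature for model(x), where features absent
    from a coalition take their value from `baseline`."""
    n = len(x)

    def value(coalition) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi
```

For example, with `model = lambda z: 2 * z[0] + 3 * z[1] * z[2]`, input `[1.0, 2.0, 3.0]`, and an all-zero baseline, the interaction term contributes 18 and is split equally between features 1 and 2, giving `[2.0, 9.0, 9.0]`. The attributions sum to f(x) - f(baseline) = 20, the efficiency property SHAP relies on.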

Monitoring and Logging

- Performance monitoring: Continuously monitor key performance metrics like accuracy, precision, recall, and F1 score to detect degradation or unexpected behavior.

- Explainability monitoring: Monitor the explanations generated by XAI techniques to identify potential biases or fairness issues.

- Data drift detection: Implement data drift detection mechanisms to identify changes in the data distribution that might affect model performance.
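One common way to detect drift in a single numeric feature is the two-sample Kolmogorov-Smirnov test, which compares recent production values with a reference sample from training. This sketch computes the KS statistic directly and compares it with the standard large-sample critical value; the function names and the default significance level of 0.05 are assumptions for illustration, and a real monitor would run such a check per feature on a schedule.

```python
# Minimal KS-based drift check; names and alpha default are illustrative assumptions.
import math
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Largest vertical gap between the two empirical CDFs."""
    a, b = sorted(reference), sorted(current)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Step past every value equal to x in both samples (handles ties).
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def drift_detected(reference: Sequence[float], current: Sequence[float],
                   alpha: float = 0.05) -> bool:
    """Flag drift when the KS statistic exceeds the asymptotic critical
    value c(alpha) * sqrt((n + m) / (n * m))."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2))
    return ks_statistic(reference, current) > c_alpha * math.sqrt((n + m) / (n * m))
```

With thousands of samples the critical value becomes small, so even minor shifts get flagged; pairing the test with an effect-size threshold on the statistic itself helps avoid alert fatigue.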

Human-in-the-Loop

- Active learning: Let the model pick the unlabeled data points it is most uncertain about and route them to human experts for labeling. This improves accuracy with fewer labeled examples and gives experts a chance to spot biased or mislabeled data.

- Human-AI collaboration: Design systems where humans and AI collaborate to make decisions, leveraging the strengths of both.
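Both ideas can be sketched on top of a model's predicted probabilities. Uncertainty sampling, one common active-learning strategy, picks the unlabeled examples with the highest prediction entropy for human labeling, and a simple collaboration policy defers low-confidence predictions to a reviewer instead of acting on them automatically. The function names and the 0.9 confidence threshold are hypothetical and would be tuned per application.

```python
# Minimal uncertainty-sampling and routing sketch; names and threshold are assumptions.
import math
from typing import List, Sequence


def binary_entropy(p: float) -> float:
    """Entropy (in nats) of a Bernoulli(p) prediction; highest at p = 0.5."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))


def select_for_labeling(probabilities: Sequence[float], k: int) -> List[int]:
    """Indices of the k most uncertain predictions, most uncertain first."""
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: binary_entropy(probabilities[i]),
                    reverse=True)
    return ranked[:k]


def route(probability: float, threshold: float = 0.9) -> str:
    """Act automatically on confident predictions; defer the rest to a human."""
    confidence = max(probability, 1 - probability)
    return "auto" if confidence >= threshold else "human_review"
```

For predicted probabilities `[0.1, 0.5, 0.9, 0.45, 0.99]`, `select_for_labeling(..., 2)` returns `[1, 3]`, the two predictions closest to a coin flip.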

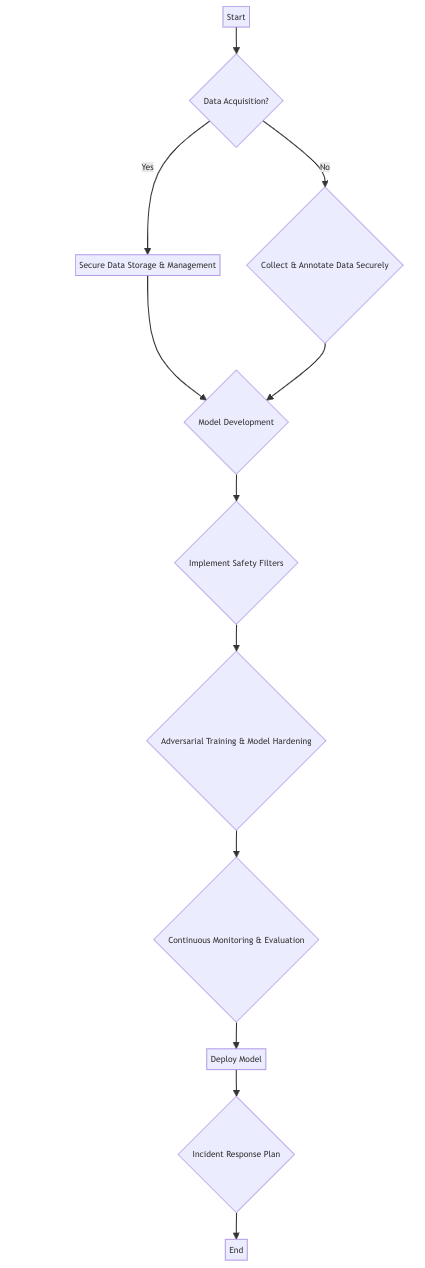

This flowchart provides a high-level overview of the steps involved in implementing AI safety measures. The specific techniques and tools used will vary depending on the application and context.

A Collaborative Effort for a Trustworthy AI Future

Building secure and safe AI requires collaboration between developers, researchers, policymakers, and end-users. By adopting these technical strategies, fostering a security-first mindset, and upholding ethical principles, we can harness the transformative power of AI while minimizing its risks. This collaborative effort will pave the way for a future where AI technologies are not only innovative but also trustworthy and beneficial for humanity.

Opinions expressed by DZone contributors are their own.
