Explainability of Machine Learning Models: Increasing Trust and Understanding in AI Systems

To make ethical and trustworthy use of AI, researchers must continue creating methodologies that balance model complexity and ease of interpretation.


Machine learning models have advanced rapidly in a variety of domains, including natural language processing, generative AI, and autonomous systems. However, as models grow in complexity and scale, their inner workings become harder to inspect, which reduces transparency and accountability. Model explainability has therefore emerged as an important field of research. This article explores the significance of machine learning model explainability, the difficulties associated with it, and the techniques that have been devised to improve interpretability. By making models more transparent and offering insights that humans can understand, explainability builds trust in AI systems and fosters the responsible adoption of AI in real-world applications.

The increasing integration of artificial intelligence (AI) and machine learning (ML) models in various domains has raised concerns about the lack of interpretability in their decision-making processes. Model explainability refers to the ability of an AI system to provide insights into its predictions or classifications that are comprehensible to humans. This article explores the significance of explainability in models, its applicability to various fields, and the influence that interpretability has on the reliability of AI.

Why Model Explainability Matters

- Ethical Significance: Explainability is a prerequisite for deploying AI ethically. It helps identify biases and discriminatory patterns in the data, both of which could have a negative impact on the decision-making process.

- Accountability and Increased Trust: The decisions made by AI systems can have far-reaching implications in fields such as healthcare, finance, and autonomous driving. Users and other stakeholders are more likely to trust explainable models because these models make the decision-making process visible and understandable. This, in turn, increases accountability for the decisions made by AI systems.

Model Explainability Challenges

- Performance and Explainability Trade-Off: There is often a trade-off between how well a model performs and how well its results can be explained. Highly interpretable models may sacrifice predictive accuracy, whereas highly accurate models are often difficult to understand.

- Model Complexity: Modern AI models, especially deep neural networks, have complex designs with millions of parameters. Working out how such models arrive at their decisions is an immense challenge.

Model Explainability Techniques

- Inherently interpretable models: Some models naturally lend themselves to interpretation, such as decision trees and linear regression. These models are usually preferred in applications where transparency is of the utmost importance.

- Rule-based explainability: Rule-based systems employ if-then rules to explain the decisions made by the model. These rules describe, in language that a person can comprehend, how the model arrives at its conclusions and predictions.

- Visualization-aided explainability: Visualization techniques such as activation maps and saliency maps help users understand how different parts of an input contribute to the model's output. Image recognition tasks benefit enormously from these techniques. For example, in Alzheimer's disease (AD) classification from brain MRI using deep learning networks, where the aim is to predict whether the subject has AD or not, a saliency map helps support the model's performance claims. Figure 1 shows a saliency map for four brain MRI scans in which AD was predicted correctly. One particular region is much more prominent than the rest, which suggests that the model bases its predictions on the regions affected by AD.

![Figure 1: Brain MRI saliency map]()

- Feature Importance: Feature importance approaches such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) assign a relevance score to each individual input feature. These methods show which features contribute the most to a given prediction.

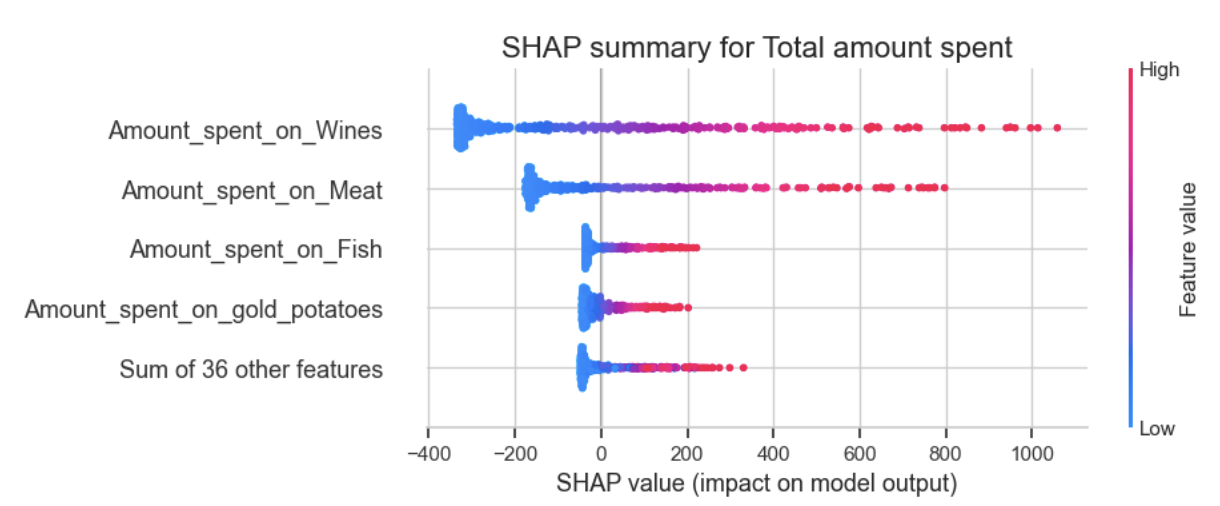
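The rule-based item in the list above can be illustrated with a minimal sketch. The loan-screening rules, thresholds, and field names below are hypothetical and chosen only for illustration; they are not taken from any real system. The point is that the decision and its human-readable explanation come from the same if-then rule.

```python
# Hypothetical screening rules: (description, condition, outcome).
# All thresholds and field names are illustrative assumptions.
RULES = [
    ("income is below 20000", lambda a: a["income"] < 20000, "deny"),
    ("debt ratio is above 0.5", lambda a: a["debt_ratio"] > 0.5, "deny"),
    ("credit history is at least 5 years", lambda a: a["history_years"] >= 5, "approve"),
]


def decide(applicant):
    """Return (decision, explanation) from the first rule that fires."""
    for description, condition, outcome in RULES:
        if condition(applicant):
            return outcome, f"{outcome} because {description}"
    # No rule matched: fall back to manual review.
    return "review", "review because no rule matched"


decision, explanation = decide(
    {"income": 50000, "debt_ratio": 0.3, "history_years": 7}
)
print(explanation)  # approve because credit history is at least 5 years
```

Because the rules are evaluated in order, the explanation always names the exact condition that produced the outcome, which is what makes this style of model easy to audit.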

Let's take a look at how SHAP can help us explain a simple linear regression model. For this experiment, I used a marketing dataset and built a linear regression model to predict the total amount spent on shopping from the other independent variables. I then applied the SHAP library to the trained model and data to identify the features that had the greatest impact on the model's predictions.

# calculate SHAP values

import matplotlib.pyplot as plt  # required for plt.title below

import shap

# model is the fitted linear regression model, X_train is the training data
explainer = shap.Explainer(model, X_train)

# X_test is the testing data
shap_values = explainer(X_test)

# plot the features with the largest impact on the prediction

plt.title('SHAP summary for Total amount spent', size=16)

shap.plots.beeswarm(shap_values, max_display=5)

The output shown in Figure 2 lists the top five features that determine the predicted total amount spent in the linear regression model. Spending on wines has the largest impact on the prediction, followed by meat and fish.
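To make the idea of a per-feature relevance score more concrete, here is a minimal sketch that computes exact Shapley values by brute force for a toy linear model. It is not the SHAP library's implementation, which uses far more efficient algorithms. The feature names echo the marketing example, but the weights, intercept, and data rows are made-up assumptions rather than values from the dataset used above.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, background):
    """Exact Shapley values for one input x (brute force, small n only).

    Features outside a coalition are filled in from each background row,
    and the resulting predictions are averaged.
    """
    n = len(x)

    def value(subset):
        total = 0.0
        for row in background:
            mixed = [x[i] if i in subset else row[i] for i in range(n)]
            total += predict(mixed)
        return total / len(background)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(len(others) + 1):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_i = set(coalition)
                with_i = without_i | {i}
                phi[i] += weight * (value(with_i) - value(without_i))
    return phi


# Hypothetical linear spend model (weights and intercept are assumptions).
FEATURES = ["wines", "meat", "fish"]
WEIGHTS = [1.0, 0.8, 0.5]
INTERCEPT = 10.0


def predict_spend(row):
    return INTERCEPT + sum(w * v for w, v in zip(WEIGHTS, row))


# Made-up background rows and one made-up customer to explain.
background = [[100.0, 50.0, 20.0], [300.0, 150.0, 40.0], [200.0, 100.0, 30.0]]
customer = [400.0, 120.0, 10.0]

phi = shapley_values(predict_spend, customer, background)
for name, contribution in zip(FEATURES, phi):
    print(f"{name}: {contribution:+.2f}")
```

For a linear model explained this way, each contribution works out to the feature's weight multiplied by the difference between the customer's value and the background mean, and the contributions add up to the customer's prediction minus the average background prediction. The brute-force loop grows exponentially with the number of features, so it is only practical for a handful of inputs.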

Model Explainability Impact

- In the financial industry, transparent models allow regulators, clients, and financial institutions to understand why a given credit card or mortgage application was approved or denied. This helps to ensure both fairness and accountability.

- In medical diagnostics, model explainability is essential for earning the confidence of healthcare professionals. Interpretable models can offer explicit reasons for the diagnoses they produce, which can lead to more assured decision-making. For example, a lot of research is being done on early disease classification using neuroimaging data. Explainable models would increase confidence in such predictions and help medical professionals diagnose diseases early.

- A lot of research and development is currently under way to enable fully self-driving cars, not only for enterprise solutions but for individual use as well. The explainability of the underlying machine learning models is of the utmost importance for the rollout of autonomous vehicles, as it helps assure drivers, passengers, and pedestrians that the AI system is making safe and reliable decisions.

Conclusion

As the use of AI systems grows more widespread, explainability of machine learning models is becoming an increasingly critical requirement. Models that are both transparent and interpretable foster accountability, trust, and ethical use. To make ethical and trustworthy use of AI in a wide variety of real-world applications, researchers and practitioners alike need to keep developing methods that balance model complexity against ease of interpretation. Ongoing collaborative efforts will continue to advance the field of machine learning model explainability and contribute to the sustainable development of AI technologies.

Opinions expressed by DZone contributors are their own.
