Integrating Rate-Limiting and Backpressure Strategies Synergistically To Handle and Alleviate Consumer Lag in Apache Kafka

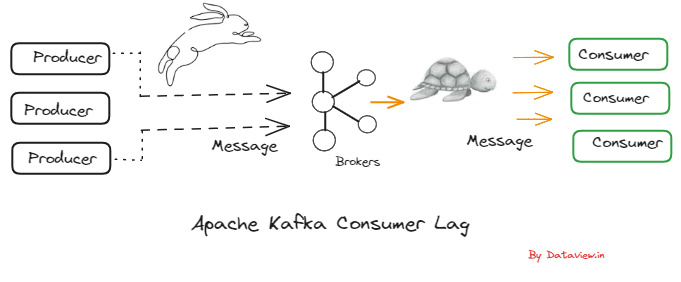



Apache Kafka is a robust distributed streaming platform. However, as with any system, its latency must be monitored and controlled to get optimal performance. Kafka consumer lag is the gap between the most recent message in a Kafka topic and the last message a consumer has processed, usually measured in offsets per partition. Lag builds up when the consumer cannot keep pace with the rate at which new messages are produced and appended to the topic. Typical causes of consumer lag include:

- Insufficient consumer capacity.

- Slow consumer processing.

- High rate of message production.

Additionally, complex data transformations, resource-intensive computations, or long-running operations within consumer applications can delay message processing. Poor network connectivity, inadequate hardware resources, or misconfigured Kafka brokers can also increase lag.

In a production environment, it's essential to minimize lag to facilitate real-time or nearly real-time message processing, ensuring that consumers can effectively match the message production rate.
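As a rough illustration of how lag is measured, per-partition lag can be computed as the difference between a partition's log end offset and the consumer group's committed offset. The offsets below are made-up sample values, and `compute_lag` is a hypothetical helper, not part of any Kafka client.

```python
def compute_lag(end_offsets, committed_offsets):
    """Return per-partition lag: log end offset minus committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # Assumption of this sketch: a partition with no committed offset
        # yet is treated as entirely unread.
        committed = committed_offsets.get(partition, 0)
        lag[partition] = max(end - committed, 0)
    return lag


# Made-up sample offsets for a three-partition topic.
end_offsets = {0: 1500, 1: 1480, 2: 1620}
committed_offsets = {0: 1500, 1: 1200, 2: 1000}

lag = compute_lag(end_offsets, committed_offsets)
total_lag = sum(lag.values())
print(lag, total_lag)
```

In this sample, partition 0 is fully caught up while partitions 1 and 2 account for all of the outstanding lag, which is why lag is usually inspected per partition rather than only as a total.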

Rate-limiting and backpressure are two ways of controlling the flow of data within a system, and both play a crucial role in handling Apache Kafka consumer lag. Rate-limiting controls the speed at which data is processed or transmitted so that a system is not overwhelmed. For a Kafka consumer, this means capping the rate at which it reads and processes messages from a topic. A consumer that fetches more than it can handle risks exhausting its resources or missing its poll deadline and triggering a rebalance, both of which make lag worse, so keeping intake at a sustainable rate helps the consumer stay stable while it works through the backlog.
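One common way to cap a processing rate is a token bucket. The sketch below is a generic, standard-library illustration rather than anything provided by Kafka: the `TokenBucket` class, its parameters, and the injected fake clock are all assumptions made for the example.

```python
import time


class TokenBucket:
    """Allow roughly `rate` records per second, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def try_acquire(self, n=1):
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False


# A fake clock keeps the demonstration deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=2, clock=lambda: fake_now[0])

results = [bucket.try_acquire() for _ in range(3)]  # third call is throttled
fake_now[0] += 1.0                                  # one second passes, bucket refills
results.append(bucket.try_acquire())
print(results)
```

In a consumer loop, a record would be processed only when `try_acquire()` returns `True`; otherwise the loop would wait briefly before retrying.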

Backpressure is a mechanism for handling situations where a downstream component cannot keep up with the rate at which data is sent to it. It signals the upstream system to slow down or temporarily stop producing data. A Kafka consumer that is experiencing lag is, by definition, not keeping up with the rate at which messages are produced. Because Kafka decouples producers from consumers, the broker does not relay such a signal on its own, so a backpressure mechanism has to be implemented to inform the producer (or an intermediate component) to slow down until the consumer catches up.
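The idea can be sketched with a bounded in-memory queue between two threads: when the downstream side is slow, the queue fills up and the upstream `put` call blocks, which is the backpressure signal. This is a generic illustration using only the Python standard library. The capacity of 5 and the record count of 20 are arbitrary, and no Kafka client is involved.

```python
import queue
import threading

buffer = queue.Queue(maxsize=5)   # small capacity, chosen only for illustration
processed = []
stats = {"max_depth": 0}


def upstream():
    for i in range(20):
        buffer.put(i)             # blocks while the buffer is full
    buffer.put(None)              # sentinel: no more records


def downstream():
    while True:
        stats["max_depth"] = max(stats["max_depth"], buffer.qsize())
        item = buffer.get()
        if item is None:
            break
        processed.append(item)


producer_thread = threading.Thread(target=upstream)
consumer_thread = threading.Thread(target=downstream)
producer_thread.start()
consumer_thread.start()
producer_thread.join()
consumer_thread.join()

print(len(processed), stats["max_depth"])
```

However fast the upstream thread runs, the number of buffered records never exceeds the queue's capacity, and every record is still delivered in order.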

Using Rate-Limiting and Backpressure in Apache Kafka

To implement rate-limiting, we can configure the Kafka consumer to limit the rate of message consumption. This can be achieved by adjusting the max.poll.records configuration, which caps the number of records returned by a single poll, or by introducing custom throttling mechanisms in the consumer application. The Kafka consumer API also provides pause and resume methods.

Kafka facilitates the dynamic control of consumption flows through the use of pause(Collection) and resume(Collection), enabling the suspension of consumption on specific assigned partitions.
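A typical way to use pause and resume is with high and low watermarks on the amount of unprocessed work. The sketch below uses a hypothetical `FakeConsumer` stand-in that only records which partitions are paused. The watermark values, partition names, and the `apply_flow_control` helper are likewise assumptions for illustration. With a real consumer, `poll` generally still has to be called while partitions are paused so that the consumer stays in its group.

```python
class FakeConsumer:
    """Hypothetical stand-in that only tracks which partitions are paused."""

    def __init__(self, partitions):
        self.assigned = set(partitions)
        self._paused = set()

    def pause(self, partitions):
        self._paused |= set(partitions)

    def resume(self, partitions):
        self._paused -= set(partitions)

    def paused(self):
        return set(self._paused)


HIGH_WATERMARK = 100  # pause fetching at or above this backlog (illustrative)
LOW_WATERMARK = 20    # resume once the backlog drains to this level (illustrative)


def apply_flow_control(consumer, backlog):
    """Pause all assigned partitions when backlog is high, resume when low."""
    if backlog >= HIGH_WATERMARK and not consumer.paused():
        consumer.pause(consumer.assigned)
    elif backlog <= LOW_WATERMARK and consumer.paused():
        consumer.resume(consumer.paused())


consumer = FakeConsumer(["orders-0", "orders-1"])
states = []
for backlog in [10, 120, 60, 15]:
    apply_flow_control(consumer, backlog)
    states.append(bool(consumer.paused()))
print(states)
```

Using two separate thresholds avoids rapid toggling between paused and resumed states when the backlog hovers around a single limit.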

Backpressure can be implemented by storing incoming records in a bounded queue and processing them one at a time, so that the queue's capacity limits how far intake can run ahead of processing. This is helpful when we want to make sure that the consumer can process records as they are produced without falling behind, or when the rate of message production is steady. We can also set enable.auto.commit=false on the consumer and commit offsets only after processing has completed. This may slow down the consumer, but it ensures that the committed offsets reflect only the messages the consumer has actually processed. We can further tune the process with max.poll.interval.ms, the maximum allowed time between two polls before the consumer is considered failed, and with max.poll.records, the number of messages returned in each poll. Besides, we can consider using external tools or frameworks that support backpressure, such as Apache Flink.
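The two poll settings interact: in the worst case, a full batch of max.poll.records must be processed within max.poll.interval.ms. The arithmetic below uses the Java consumer defaults (500 records and 300000 ms) together with a made-up processing cost of 800 ms per record. The `max_safe_poll_records` helper and its `safety_factor` are hypothetical and serve only to illustrate the calculation.

```python
def max_safe_poll_records(max_poll_interval_ms, per_record_ms, safety_factor=0.5):
    """Largest batch size that fits in a fraction of the poll interval."""
    budget_ms = max_poll_interval_ms * safety_factor
    return max(int(budget_ms // per_record_ms), 1)


MAX_POLL_RECORDS = 500          # Java consumer default
MAX_POLL_INTERVAL_MS = 300_000  # Java consumer default (5 minutes)
PER_RECORD_MS = 800             # made-up processing cost per record

batch_time_ms = MAX_POLL_RECORDS * PER_RECORD_MS
fits = batch_time_ms <= MAX_POLL_INTERVAL_MS
suggested = max_safe_poll_records(MAX_POLL_INTERVAL_MS, PER_RECORD_MS)
print(batch_time_ms, fits, suggested)
```

Under these assumed numbers a full default batch would take 400000 ms and overrun the poll interval, so either max.poll.records would need to be lowered or max.poll.interval.ms raised.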

Various third-party monitoring tools and user interfaces offer an intuitive platform for visualizing Kafka lag metrics. Options include Burrow, Grafana for ongoing monitoring, or a local Kafka UI connected to our production Kafka instance.


Published at DZone with permission of Gautam Goswami, DZone MVB. See the original article here.

