The State of Data Streaming for Healthcare With Kafka and Flink

Data Streaming with Apache Kafka and Apache Flink enables IT modernization and innovation at healthcare companies like Humana, BHG, and Recursion.

This blog post explores the state of data streaming for the healthcare industry in 2023. Digital disruption, combined with growing regulatory requirements and IT modernization efforts, requires a reliable data infrastructure, real-time end-to-end observability, fast time-to-market for new features, and integration with pioneering technologies like sensors, telemedicine, or AI/machine learning. Data streaming allows integrating and correlating legacy and modern interfaces in real time at any scale to improve most business processes in the healthcare sector much more cost-efficiently.

I look at trends in the healthcare industry to explore how data streaming helps as a business enabler, including customer stories from Humana, Recursion, BHG (formerly Bankers Healthcare Group), Evernorth Health Services, and more. A complete slide deck and on-demand video recording are included.

General Trends in the Healthcare Industry

The digitalization of the healthcare sector and its disruptive use cases are exciting. Countries where healthcare is not part of the public administration innovate quickly. However, regulation and data privacy are crucial across the world. Even innovative technologies and cloud services need to comply with the law and, in parallel, connect to legacy platforms and protocols.

Regulation and Interoperability

Healthcare often does not have a choice. Regulations by the government must be implemented by a specific deadline. IT modernization, adoption of new technologies, and integration with the legacy world are mandatory. Many regulations demand open APIs and interfaces. But even if not enforced, the public sector does itself a favor by adopting open technologies for data sharing between different sectors and the members.

A concrete example is the Interoperability and Patient Access final rule (CMS-9115-F), which, as explained by the US government, "aims to put patients first, giving them access to their health information when they need it most and in a way they can best use it.

- Interoperability: The ability of two or more systems to exchange health information and use the information once it is received.

- Patient access: The ability of consumers to access their health care records.

Lack of seamless data exchange in healthcare has historically detracted from patient care, leading to poor health outcomes and higher costs. The CMS Interoperability and Patient Access final rule establishes policies that break down barriers in the nation’s health system to enable better patient access to their health information, improve interoperability, and unleash innovation while reducing the burden on payers and providers.

Patients and their healthcare providers will be more informed, which can lead to better care and improved patient outcomes while reducing the burden. In a future where data flows freely and securely between payers, providers, and patients, we can achieve truly coordinated care, improved health outcomes, and reduced costs."

Digital Disruption and Automated Workflows

Gartner has a few interesting insights about the evolution of the healthcare sector. Digital disruption is required to handle revenue reduction and revenue reinvention because of economic pressure, scarce and expensive talent, and supply challenges.

Gartner points out that real-time workflows and automation are critical across the entire health process to enable an optimal experience.

Therefore, data streaming is very helpful in implementing new digitalized healthcare processes.

Data Streaming in the Healthcare Industry

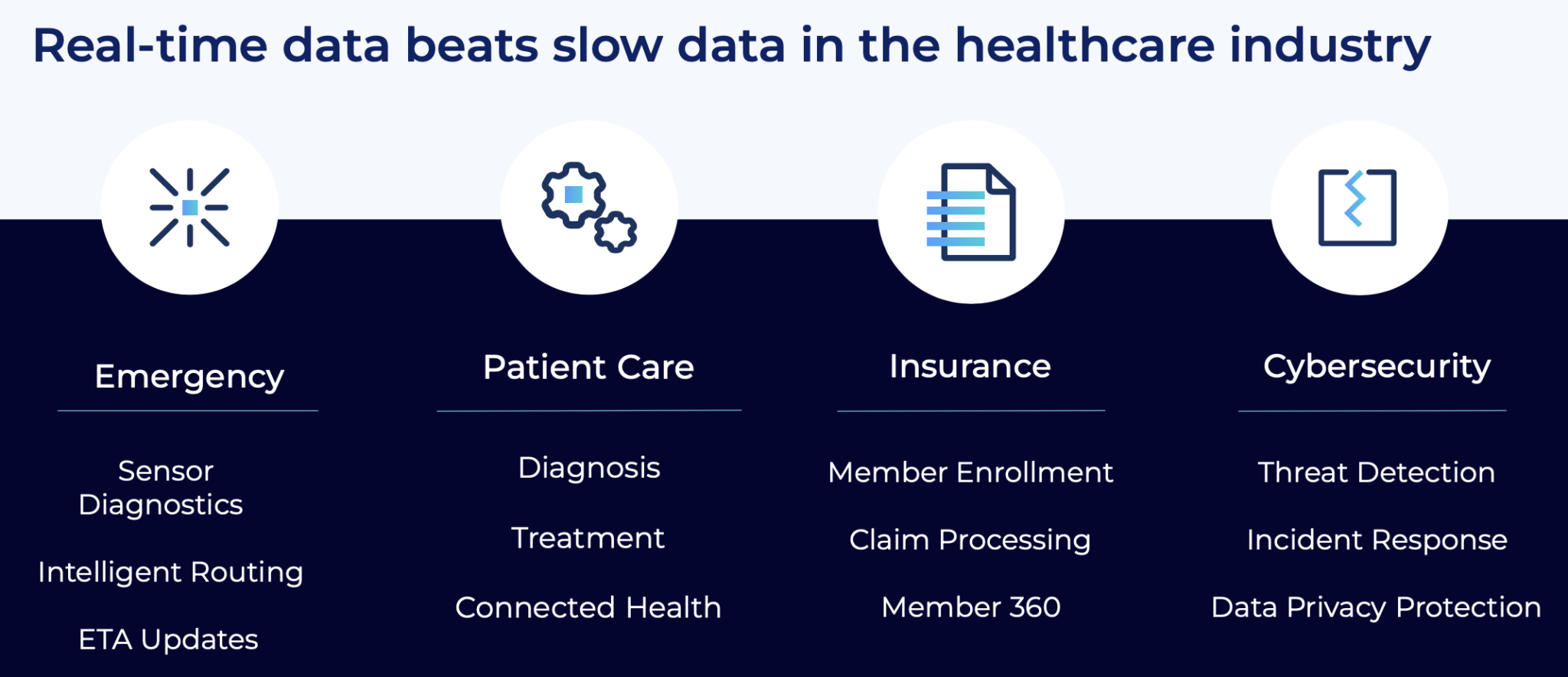

Adopting healthcare trends like telemedicine, automated member service with Generative AI (GenAI), or automated claim processing is only possible if enterprises in the healthcare sector can provide and correlate information at the right time in the proper context. Real-time, which means using the information in milliseconds, seconds, or minutes, is almost always better than processing data later.

Data streaming combines the power of real-time messaging at any scale with storage for true decoupling, data integration, and data correlation capabilities.
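
To make the decoupling idea concrete, here is a minimal in-memory sketch in Python. It is not Kafka's API: `EventLog`, `append`, and `poll` are hypothetical names, and a single Python list stands in for a durable, partitioned topic. What it tries to show is that, because events are stored rather than handed off, each consumer keeps its own read position and can read at its own pace, or start later, without affecting the producer or other consumers.

```python
from collections import defaultdict


class EventLog:
    """Toy append-only log; a stand-in for a durable topic (assumption, not Kafka's API)."""

    def __init__(self):
        self._events = []                 # stored events, never removed on read
        self._offsets = defaultdict(int)  # next offset to read, per consumer name

    def append(self, event):
        """Store an event and return its offset."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, consumer, max_events=10):
        """Return the next unread events for this consumer and advance its offset."""
        start = self._offsets[consumer]
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch


log = EventLog()
log.append({"type": "claim_submitted", "claim_id": "C-1"})
log.append({"type": "claim_submitted", "claim_id": "C-2"})

# A real-time consumer reads events shortly after they arrive.
realtime_batch = log.poll("fraud-check")

log.append({"type": "claim_submitted", "claim_id": "C-3"})

# A consumer that starts later still sees the full history, in order.
late_batch = log.poll("nightly-report")
```

The consumer names and event fields are made up for illustration. In a real deployment, the log would be replicated and partitioned, and offsets would be managed by the streaming platform.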

The following blog series about data streaming with Apache Kafka in the healthcare industry is a great starting point to learn more about data streaming in the health sector, including a few industry-specific and Kafka-powered case studies:

- Data Streaming Use Cases and Architectures for Healthcare (including Slide Deck)

- Legacy Modernization and Hybrid Cloud (Optum / UnitedHealth Group, Centene, Bayer)

- Streaming ETL (Bayer, Babylon Health)

- Real-time Analytics (Cerner, Celmatix, CDC/Centers for Disease Control and Prevention)

- Machine Learning and Data Science (Recursion, Humana)

- Open API and Omnichannel (Care.com, Invitae)

Architecture Trends for Data Streaming

The healthcare industry applies various software development and enterprise architecture trends for cost, elasticity, security, and latency reasons. The three major topics I see these days at customers are:

- Event-driven architectures (in combination with request-response communication) to enable domain-driven design and flexible technology choices

- Data mesh for building new data products and real-time data sharing with internal platforms and partner APIs

- Fully managed SaaS (whenever doable from a compliance and security perspective) to focus on business logic and faster time-to-market
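
As a rough illustration of the first point, the sketch below combines event-driven and request-response communication in a few lines of Python. It is a simplified in-process model under stated assumptions: `EventBus` and `AppointmentView` are hypothetical names, the "appointments" topic and its fields are invented, and the bus stands in for a streaming platform. Events flow asynchronously to any number of subscribers, while one subscriber builds its own state and answers synchronous queries from it.

```python
from collections import defaultdict


class EventBus:
    """Toy in-process publish/subscribe bus (hypothetical stand-in for a streaming platform)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Every subscriber receives the event; the publisher knows none of them.
        for handler in self._subscribers[topic]:
            handler(event)


class AppointmentView:
    """Domain service that consumes events and answers queries from its own state."""

    def __init__(self):
        self._by_member = {}

    def on_event(self, event):
        # Event-driven side: update local state whenever an event arrives.
        self._by_member.setdefault(event["member_id"], []).append(event["slot"])

    def get_appointments(self, member_id):
        # Request-response side: a plain lookup, as an HTTP endpoint might perform.
        return list(self._by_member.get(member_id, []))


bus = EventBus()
view = AppointmentView()
audit_log = []

bus.subscribe("appointments", view.on_event)
bus.subscribe("appointments", audit_log.append)  # a second, independent consumer

bus.publish("appointments", {"member_id": "M-1", "slot": "2023-11-02T09:00"})
bus.publish("appointments", {"member_id": "M-1", "slot": "2023-11-09T09:00"})

appointments = view.get_appointments("M-1")
```

Each domain owns its own view of the data, which is one way to read the "flexible technology choices" mentioned above: the audit consumer could be replaced or rewritten without touching the publisher or the appointment service.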

Let's look deeper into some enterprise architectures that leverage data streaming for healthcare use cases.

Event-Driven Architecture for Integration and IT Modernization

IT modernization requires integration between legacy and modern applications. The integration challenges include different protocols (often proprietary and complex), various communication paradigms (asynchronous, request-response, batch), and SLAs (transactions, analytics, reporting).

Here is an example of a data integration workflow combining clinical health data and claims in EDI / EDIFACT format, data from legacy databases, and modern microservices:

One of the biggest problems in IT modernization is data consistency between files, databases, messaging platforms, and APIs. That is a sweet spot for Apache Kafka: Providing data consistency between applications no matter what technology, interface, or API they use.
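
The integration pattern described above can be sketched as small adapters that map each source into one common event shape before it is published. This is a simplified illustration, not a real EDI parser: the pipe-delimited `CLAIM|...` line is a made-up stand-in for a claim record in an EDI-style file, and the `Event` type, the adapter functions, and all field names and sample values are assumptions introduced for the example.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Common envelope that all sources are mapped to (hypothetical schema)."""
    key: str       # member ID, used to correlate events across sources
    source: str    # where the event came from
    payload: dict


def from_delimited_claim(line):
    """Adapter for a made-up pipe-delimited claim record (not real EDI / EDIFACT)."""
    record_type, claim_id, member_id, amount = line.strip().split("|")
    if record_type != "CLAIM":
        raise ValueError(f"unexpected record type: {record_type}")
    return Event(member_id, "claims-file", {"claim_id": claim_id, "amount": float(amount)})


def from_db_row(row):
    """Adapter for a legacy database row, given as (member_id, diagnosis_code)."""
    member_id, diagnosis_code = row
    return Event(member_id, "legacy-db", {"diagnosis_code": diagnosis_code})


def from_service_json(body):
    """Adapter for a JSON message emitted by a modern microservice."""
    data = json.loads(body)
    return Event(data["memberId"], "microservice", {"visit_type": data["visitType"]})


events = [
    from_delimited_claim("CLAIM|C-100|M-1|250.00"),
    from_db_row(("M-1", "DX-001")),
    from_service_json('{"memberId": "M-2", "visitType": "telehealth"}'),
]

# Group by key so downstream consumers see one consistent view per member.
sources_by_member = {}
for event in events:
    sources_by_member.setdefault(event.key, []).append(event.source)
```

Keying every event by the same identifier is the design choice that matters here: once files, database rows, and API messages share a key and an envelope, consumers can correlate them regardless of where they originated.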

Data Mesh for Real-Time Data Sharing and Consistency

Data sharing across business units is important for any organization. The healthcare industry has to combine very different data sets, like clinical health data, claims, sensor data, and 3rd party interfaces.

Data consistency is one of the most challenging problems in the healthcare sector. Apache Kafka ensures data consistency across all applications and databases, whether these systems operate in real-time, near-real-time, or batch.

One sweet spot of data streaming is that you can easily connect new applications to the existing infrastructure or modernize existing interfaces, like migrating from an on-premise data warehouse to a cloud SaaS offering.

New Customer Stories for Data Streaming in the Healthcare Sector

Innovation is often slower in the healthcare sector. Automation and digitalization change how healthcare companies process member data, execute claim processing, integrate payment processors, or create new business models with telemedicine or sensor data in hospitals.

Most healthcare companies use a hybrid cloud approach to improve time-to-market, increase flexibility, and focus on business logic instead of operating all IT infrastructure on premises. The integration between legacy protocols like EDIFACT and modern applications is still one of the toughest challenges.

Here are a few customer stories from healthcare organizations for IT modernization and innovation with new technologies:

- BHG Financial (formerly Bankers Healthcare Group): Direct lender for healthcare professionals offering loans, credit cards, insurance

- Evernorth Health Services: Hybrid integration between on-premise mainframe and microservices on AWS cloud

- Humana: Data integration and analytics at the point of care

- Recursion: Accelerating drug discovery with a hybrid machine-learning architecture

Resources To Learn More

This blog post is just the starting point. Learn more about data streaming with Apache Kafka and Apache Flink in the healthcare industry in the following on-demand webinar recording, the related slide deck, and further resources, including pretty cool lightboard videos about use cases.

On-Demand Video Recording

The video recording explores the healthcare industry's trends and architectures for data streaming. The primary focus is the data streaming architectures and case studies.

I am excited to have presented this webinar in my interactive lightboard studio.

This creates a much better experience, especially in a time after the pandemic, where many people suffer from "Zoom fatigue."

Check out our on-demand recording:

Slides

If you prefer learning from slides, check out the deck used for the above recording.

Case Studies and Lightboard Videos for Data Streaming in the Healthcare Industry

The state of data streaming for healthcare in 2023 is interesting. IT modernization is the most important initiative across most healthcare companies and organizations. This includes cost reduction by migrating from legacy infrastructure like the mainframe, hybrid cloud architectures with bi-directional data sharing, and innovative new use cases like telehealth.

We recorded lightboard videos showing the value of data streaming simply and effectively. These five-minute videos explore the business value of data streaming, related architectures, and customer stories. Here is an example of cost reduction through mainframe offloading.

Healthcare is just one of many industries that leverage data streaming with Apache Kafka and Apache Flink. Every month, we talk about the status of data streaming in a different industry. Manufacturing was the first, financial services the second, then retail, telcos, gaming, and so on. Check out my other blog posts.

How do you modernize IT infrastructure in the healthcare sector? Do you already leverage data streaming with Apache Kafka and Apache Flink? Maybe even in the cloud as a serverless offering? Let’s connect on LinkedIn and discuss it! Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter.

Published at DZone with permission of Kai Wähner, DZone MVB. See the original article here.
