Understanding the CAP Theorem

In this article, we take an exploratory look at one of the more important ideas in the field of data engineering, and where it stands today.


The CAP theorem is a tool that makes system designers aware of the trade-offs involved in designing networked shared-data systems. CAP has influenced the design of many distributed data systems by making designers aware of a wide range of trade-offs to consider. Over the years, however, the CAP theorem has been widely misunderstood and misused as a tool to categorize databases. There is much misinformation floating around about CAP, and most blog posts on the subject are historical and possibly incorrect.

It is important to understand CAP so that you can identify the misinformation around it.

The CAP theorem applies to distributed systems that store state. At the 2000 Symposium on Principles of Distributed Computing (PODC), Eric Brewer conjectured that in any networked shared-data system there is a fundamental trade-off between consistency, availability, and partition tolerance. In 2002, Seth Gilbert and Nancy Lynch of MIT published a formal proof of Brewer's conjecture. The theorem states that a networked shared-data system can only guarantee (strongly support) two of the following three properties:

- Consistency: a guarantee that every node in a distributed cluster returns the same, most recent, successful write. Consistency refers to every client having the same view of the data. There are various consistency models; consistency in CAP (the notion used to prove the theorem) refers to linearizability, also called atomic consistency, a very strong form of consistency.

- Availability: every non-failing node returns a response for all read and write requests in a reasonable amount of time. The key word here is "every". To be available, every node (on either side of a network partition) must be able to respond in a reasonable amount of time.

- Partition tolerance: the system continues to function and upholds its consistency guarantees in spite of network partitions. Network partitions are a fact of life. Distributed systems that guarantee partition tolerance can gracefully recover from a partition once it heals.
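
To make these definitions concrete, here is a minimal sketch of two hypothetical replicas, A and B, separated by a partition. It follows the spirit of the impossibility argument rather than reproducing Gilbert and Lynch's proof. A client writes `v1` to A, which cannot replicate it to B, and then reads from both nodes. The function names and values are illustrative assumptions, and `is_consistent` is a simplified stand-in for linearizability that only compares each answered read with the latest acknowledged write.

```python
from typing import Optional


def partitioned_write_then_read(b_answers: bool) -> tuple[str, list[Optional[str]]]:
    """Hypothetical scenario: replicas A and B both start with "v0", a partition
    separates them, a client writes "v1" to A, then reads from A and from B."""
    a_value = b_value = "v0"  # both replicas start in sync
    a_value = "v1"            # A acknowledges the write; the partition blocks replication to B
    # B never sees "v1": it can answer with its stale copy or refuse (None).
    reads = [a_value, b_value if b_answers else None]
    return a_value, reads


def is_consistent(latest: str, reads: list[Optional[str]]) -> bool:
    # Simplified stand-in for linearizability: every answered read
    # must return the most recent successful write.
    return all(r == latest for r in reads if r is not None)


def is_available(reads: list[Optional[str]]) -> bool:
    # Every request sent to a non-failing node received a response.
    return all(r is not None for r in reads)


for b_answers in (True, False):
    latest, reads = partitioned_write_then_read(b_answers)
    print(f"B answers={b_answers}: consistent={is_consistent(latest, reads)}, "
          f"available={is_available(reads)}")
# Prints:
# B answers=True: consistent=False, available=True
# B answers=False: consistent=True, available=False
```

Whichever policy node B follows, one property is lost: answering returns the stale `v0` and breaks consistency, while refusing to answer breaks availability.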

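The C and A in ACID represent different concepts than the C and A in the CAP theorem: in ACID, C stands for consistency in the sense of preserving the database's integrity constraints, and A stands for atomicity.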

The CAP theorem categorizes systems into three categories:

- CP (consistent and partition tolerant): at first glance, the CP category is confusing, as it seems to describe a system that is consistent and partition tolerant but never available. In fact, CP refers to a category of systems where availability is sacrificed only in the case of a network partition.

- CA (consistent and available): CA systems are consistent and available in the absence of any network partition. Single-node database servers are often categorized as CA systems, since they do not need to deal with partition tolerance. The only hole in this reasoning is that single-node database systems are not networked shared-data systems and thus do not fall under the purview of CAP. [^11]

- AP (available and partition tolerant): these systems are available and partition tolerant but cannot guarantee consistency.

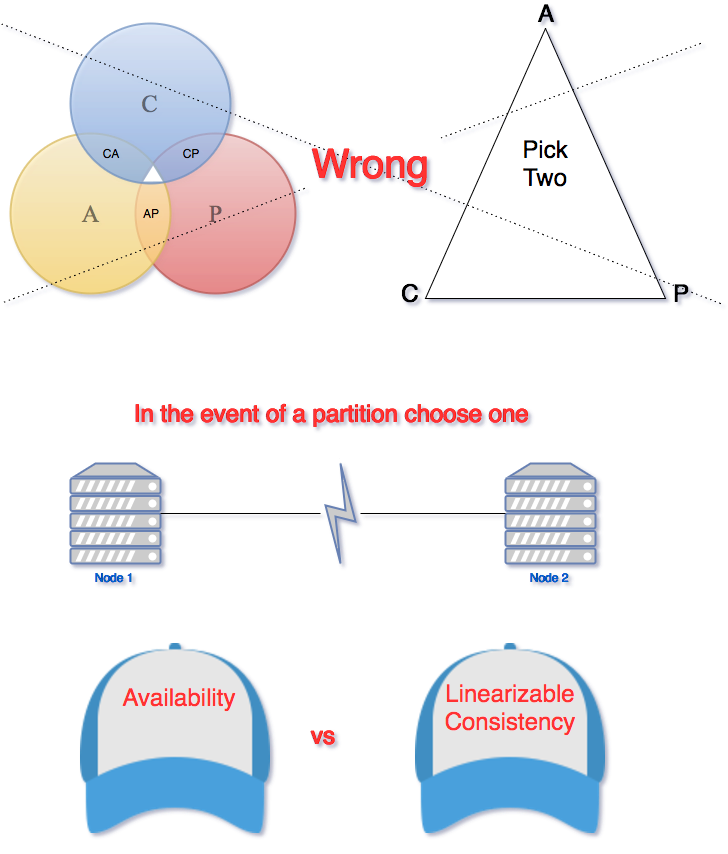

A Venn diagram or a triangle is frequently used to visualize the CAP theorem: systems fall into the three categories depicted by the intersecting circles.

The part where all three sections intersect is white because it is impossible to have all three properties in a networked shared-data system. However, any such visualization, whether a Venn diagram or a triangle, is misleading. The correct way to think about CAP is that in the case of a network partition (a rare occurrence), one needs to choose between availability and consistency.

In any networked shared-data system, partition tolerance is a must. Network partitions and dropped messages are a fact of life and must be handled appropriately. Consequently, system designers must choose between consistency and availability. Simplistically speaking, a network partition forces designers to choose either perfect consistency or perfect availability. Picking consistency means not being able to answer a client's query when the system cannot guarantee to return the most recent write. This sacrifices availability.

In such a system, a network partition forces non-failing nodes to reject clients' requests, as these nodes cannot guarantee consistent data. At the opposite end of the spectrum, being available means being able to respond to a client's request, but the system cannot guarantee consistency, i.e., that the most recent value written is returned. Available systems provide the best possible answer under the given circumstances.

During normal operation (in the absence of a network partition), the CAP theorem does not impose constraints on availability or consistency.
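
The CP/AP choice described above can be sketched with a toy replicated register. This is a hypothetical model, not the behavior of any particular database: `ReplicatedRegister`, its `mode` parameter, and the all-or-nothing replication are assumptions made for illustration. Without a partition, both modes replicate every write and return the latest value; once `partitioned` is set, the CP mode rejects requests while the AP mode keeps answering from its local, possibly stale, replica.

```python
from typing import Optional


class ReplicatedRegister:
    """Hypothetical toy model: one value replicated on nodes A and B, with a
    switchable network partition between them.

    mode="CP": refuse requests during a partition rather than risk inconsistency.
    mode="AP": always answer from the local replica, possibly with stale data.
    """

    def __init__(self, mode: str) -> None:
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.replicas = {"A": "v0", "B": "v0"}
        self.partitioned = False

    def write(self, node: str, value: str) -> bool:
        """Returns True if the write was acknowledged."""
        if not self.partitioned:
            # Normal operation: update every replica before acknowledging.
            for name in self.replicas:
                self.replicas[name] = value
            return True
        if self.mode == "CP":
            return False  # the other replica is unreachable, so refuse the write
        self.replicas[node] = value  # AP: accept locally; replicas now diverge
        return True

    def read(self, node: str) -> Optional[str]:
        """Returns the node's value, or None if the node refuses to answer."""
        if self.partitioned and self.mode == "CP":
            return None  # cannot confirm this is the latest write, so refuse
        return self.replicas[node]


for mode in ("CP", "AP"):
    reg = ReplicatedRegister(mode)
    reg.write("A", "v1")                     # no partition: replicated to A and B
    normal = (reg.read("A"), reg.read("B"))
    reg.partitioned = True
    acked = reg.write("A", "v2")
    during = (reg.read("A"), reg.read("B"))
    print(mode, normal, acked, during)
# Prints:
# CP ('v1', 'v1') False (None, None)
# AP ('v1', 'v1') True ('v2', 'v1')
```

Both modes behave identically during normal operation; only the partition forces the choice. Real systems are rarely this all-or-nothing; the model only makes the trade-off visible.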

The CAP theorem is responsible for instigating the discussion about the various trade-offs in distributed shared-data systems, and it has played a pivotal role in increasing our understanding of such systems. Nonetheless, the CAP theorem is criticized for being too simplistic and often misleading. Over a decade after the CAP theorem was introduced, Brewer acknowledged that it oversimplified the choices available in the event of a network partition.

According to Brewer, the CAP theorem prohibits only a “tiny part of the design space: perfect availability and consistency in the presence of partitions, which are rare.” System designers have a broad range of options for dealing with and recovering from network partitions. The goal of every system must be to “maximize combinations of consistency and availability that make sense for the specific application.”

References:

Published at DZone with permission of Akhil Mehra. See the original article here.

