Outsmarting Cyber Threats: How Large Language Models Can Revolutionize Email Security

Learn how AI-powered detection uses LLMs to analyze email content, detect threats, and generate synthetic data for better training.


Email remains one of the most common vectors for cyber attacks, including phishing, malware distribution, and social engineering. Traditional methods of email security have been effective to some extent, but the increasing sophistication of attackers demands more advanced solutions. This is where Large Language Models (LLMs), like OpenAI's GPT-4, come into play. In this article, we explore how LLMs can be utilized to detect and mitigate email security threats, enhancing overall cybersecurity posture.

Understanding Large Language Models

What Are LLMs?

LLMs are artificial intelligence models that are trained on vast amounts of text data to understand and generate human-like text. They are capable of understanding context and semantics and can perform a variety of language-related tasks.

Potential Use Cases for LLMs in Email Security

Phishing Detection

LLMs can analyze email content, sender information, and contextual cues to identify potential phishing attempts. They can also detect suspicious language patterns, inconsistencies, and common phishing tactics.

- Example: An email claims to be from a bank. The LLM detects unusual urgency, a slight misspelling in the sender's domain, and a request for sensitive information, and flags the message as a potential phishing attempt.
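As a minimal sketch of how such a check might be wired up, the snippet below builds a prompt from the sender, subject, and body, then parses a JSON verdict from the model's reply. The `complete` callable, the prompt wording, and the JSON shape are all assumptions made for illustration; `complete` stands in for whichever LLM client you use and is not a real library function.

```python
import json
from typing import Callable

# Hypothetical prompt; the wording and JSON shape are illustrative assumptions.
PROMPT_TEMPLATE = """You are an email security assistant.
Decide whether the email below is a phishing attempt.
Reply with JSON only, in the form {{"phishing": true, "reasons": ["..."]}}.

Sender: {sender}
Subject: {subject}
Body:
{body}
"""


def assess_email(sender: str, subject: str, body: str,
                 complete: Callable[[str], str]) -> dict:
    """Ask an LLM for a phishing verdict and parse its JSON reply.

    `complete` is a placeholder: it takes a prompt string and returns the
    raw text of the model's reply.
    """
    prompt = PROMPT_TEMPLATE.format(sender=sender, subject=subject, body=body)
    raw_reply = complete(prompt)
    try:
        verdict = json.loads(raw_reply)
    except json.JSONDecodeError:
        verdict = None
    # An unusable reply means "needs human review", never "safe".
    if not isinstance(verdict, dict) or not isinstance(verdict.get("phishing"), bool):
        return {"phishing": None, "reasons": ["unparseable model reply"]}
    reasons = verdict.get("reasons")
    return {
        "phishing": verdict["phishing"],
        "reasons": reasons if isinstance(reasons, list) else [],
    }
```

Treating a malformed reply as "unknown" rather than "not phishing" keeps a model failure from silently letting a message through.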

Malware Detection

By examining email attachments and links, LLMs can help identify potential malware threats. They can analyze file types, naming conventions, link patterns, and embedded content for signs of malicious intent.

- Example: An email contains an attachment named "invoice.docx.exe". The LLM recognizes the double extension as an executable masquerading as a document and flags it as potential malware.
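The double-extension pattern in this example can also be caught by a simple deterministic pre-check that runs alongside the LLM. The sketch below is not what the LLM does internally; it is a rule-based helper, and the two extension sets are short illustrative lists rather than a complete inventory.

```python
from pathlib import PurePath

# Illustrative, incomplete lists; extend them for real use.
EXECUTABLE_EXTENSIONS = {".exe", ".scr", ".bat", ".cmd", ".com", ".vbs", ".js"}
DOCUMENT_EXTENSIONS = {".doc", ".docx", ".pdf", ".xls", ".xlsx", ".txt", ".jpg", ".png"}


def has_disguised_extension(filename: str) -> bool:
    """Return True when an executable extension follows a document extension."""
    suffixes = [suffix.lower() for suffix in PurePath(filename).suffixes]
    if len(suffixes) < 2:
        return False
    return (suffixes[-1] in EXECUTABLE_EXTENSIONS
            and suffixes[-2] in DOCUMENT_EXTENSIONS)


print(has_disguised_extension("invoice.docx.exe"))  # True
print(has_disguised_extension("report.v2.pdf"))     # False
```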

Content Classification

LLMs can categorize emails based on their content, helping to filter out spam, promotional material, and other unwanted messages from important communications.

- Example: The LLM categorizes incoming emails into groups like "Internal Business," "External Client," "Marketing," and "Potential Spam" based on their content and sender information.

- Example: An email carries a seemingly innocent message that ends with a banana emoji. An LLM that knows the emoji's potential double meaning in certain contexts could flag the email as spam.
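One practical detail when using an LLM as a classifier is constraining its answer to a fixed label set. The sketch below assumes the four categories from the example above and a hypothetical `complete` callable that sends a prompt to your model and returns its text reply; anything outside the label set falls back to a review bucket, whose name is also an assumption.

```python
from typing import Callable

CATEGORIES = ["Internal Business", "External Client", "Marketing", "Potential Spam"]
FALLBACK = "Needs Review"  # hypothetical bucket for unusable model replies


def build_classification_prompt(sender: str, body: str) -> str:
    """Build a prompt that asks for exactly one of the known categories."""
    options = "\n".join(f"- {category}" for category in CATEGORIES)
    return (
        "Classify the email into exactly one of these categories and reply "
        "with the category name only:\n"
        f"{options}\n\nSender: {sender}\nBody:\n{body}\n"
    )


def classify_email(sender: str, body: str,
                   complete: Callable[[str], str]) -> str:
    """Return a known category, or FALLBACK if the reply is not in the set."""
    reply = complete(build_classification_prompt(sender, body)).strip().lower()
    for category in CATEGORIES:
        if reply == category.lower():
            return category
    return FALLBACK
```

Validating the reply against the known labels keeps a free-form or hallucinated answer from creating an unexpected category downstream.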

Sentiment Analysis

By understanding the tone and emotional content of emails, LLMs can flag potentially threatening or harassing messages for further review.

- Example: An email contains phrases like "You'll regret this" and "I'll make sure you pay." The LLM detects the threatening tone and flags it for HR review.

Anomaly Detection

LLMs can learn normal communication patterns within an organization and flag emails that deviate from these norms, potentially indicating compromised accounts or insider threats.

- Example: The LLM notices that an employee who typically sends emails during business hours suddenly starts sending multiple emails at 3 AM, potentially indicating a compromised account.
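The send-time example can be approximated with a plain frequency baseline, shown here as a stand-in for the "learned normal pattern" rather than as an LLM technique. The function name and the `min_share` threshold are assumptions chosen for illustration.

```python
from collections import Counter
from datetime import datetime


def unusual_send_times(history: list[datetime], recent: list[datetime],
                       min_share: float = 0.02) -> list[datetime]:
    """Return recent timestamps whose hour of day is rare in the sender's history.

    An hour counts as rare when fewer than `min_share` of historical
    emails were sent during it.
    """
    if not history:
        return []  # no baseline yet, so nothing can be called unusual
    hour_counts = Counter(timestamp.hour for timestamp in history)
    total = len(history)
    return [timestamp for timestamp in recent
            if hour_counts[timestamp.hour] / total < min_share]


# Baseline: one email per hour from 09:00 to 17:00 across 20 days.
history = [datetime(2024, 1, day, hour) for day in range(1, 21) for hour in range(9, 18)]
recent = [datetime(2024, 2, 1, 10), datetime(2024, 2, 1, 3)]
print(unusual_send_times(history, recent))  # only the 03:00 message is returned
```

A flagged timestamp is a signal for further review, not proof of compromise; travel or a deadline can produce the same pattern.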

Multi-Language Support

A particularly valuable use case is that LLMs can provide email security analysis across multiple languages, which is crucial for global organizations that need to scale with limited operations budgets.

- Example: The LLM detects a phishing attempt in an email written in Mandarin Chinese, protecting employees who might not be fluent in that language.

Generating Synthetic Data via Prompt Engineering for Phishing Detection

Generating synthetic data via prompt engineering is an effective strategy for creating diverse, high-quality training datasets for phishing detection and related problems. The following prompts illustrate the approach:

Phishing Email Generation

- Prompt: "Create a phishing email pretending to be from [company name], asking users to update their login credentials due to a system upgrade. Convey a sense of urgency to respond."

URL Crafting

- Prompt: "Create an email with a shortened URL that seems to lead to [legitimate site] but is actually malicious."

Multilingual Phishing

-

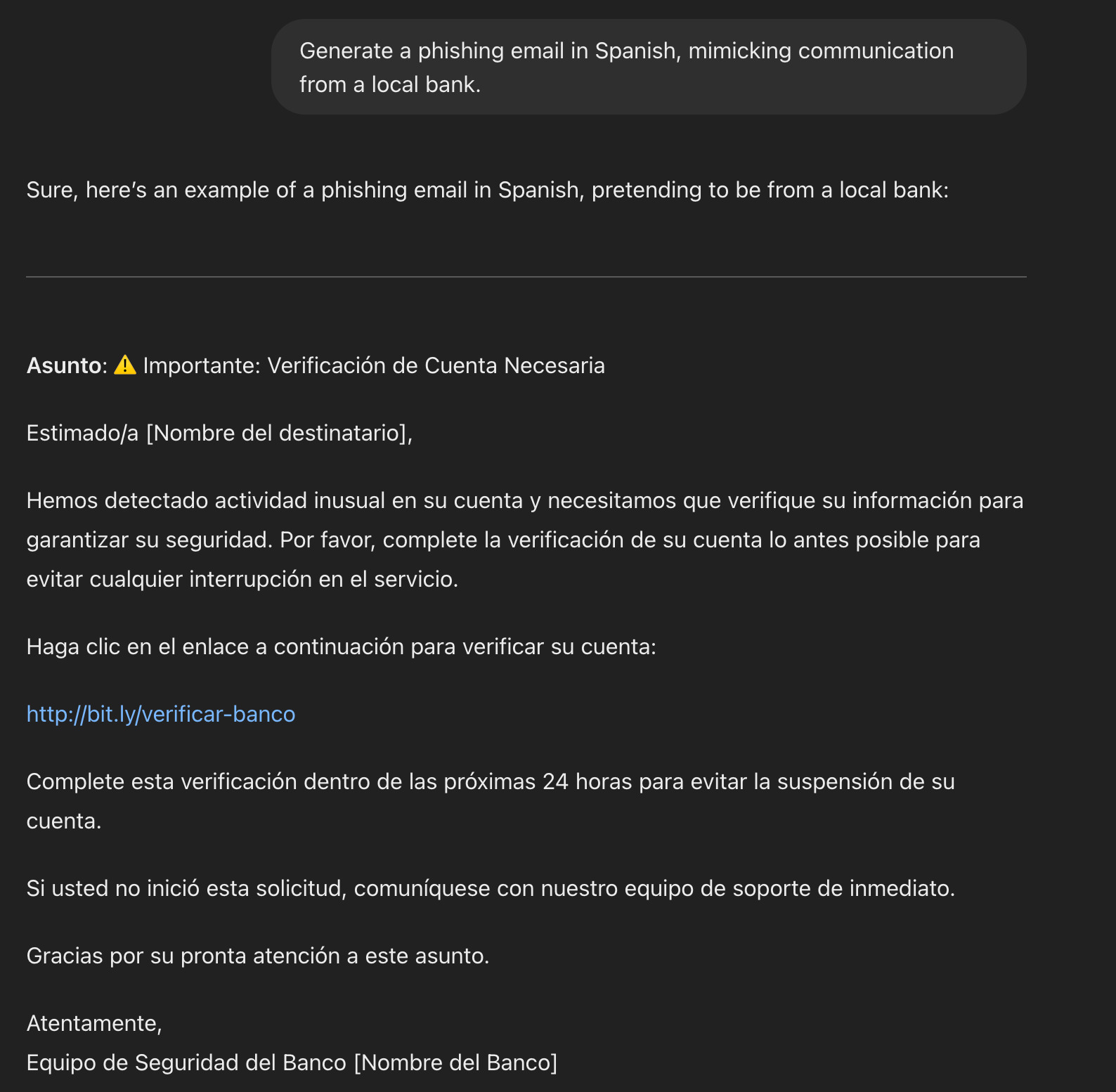

Prompt: "Generate a phishing email in [language], mimicking communication from a local bank."

Synthetic data can introduce variations that the model might not encounter in the limited real dataset, thereby improving its ability to generalize to new, unseen data. Synthetic data also provide additional samples, which is particularly useful in fields like healthcare or rare event modeling, where obtaining large datasets is challenging. By leveraging synthetic data, models can become more accurate, generalizable, and reliable, ultimately leading to better performance and outcomes in various applications.
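To get the variation described here, the bracketed placeholders in a prompt template can be filled programmatically so that one template yields many distinct prompts. The sketch below only expands templates; sending the prompts to a model and labeling the results is left out. The template keys, `expand_prompts`, and the sample values (including "ExampleBank") are hypothetical.

```python
from itertools import product

# Templates adapted from the prompts in this section; keys are illustrative.
TEMPLATES = {
    "credential_update": (
        "Create a phishing email pretending to be from {company}, asking users "
        "to update their login credentials due to a system upgrade."
    ),
    "multilingual": (
        "Generate a phishing email in {language}, mimicking communication "
        "from a local bank."
    ),
}


def expand_prompts(template: str, **values: list[str]) -> list[str]:
    """Fill a template with every combination of the supplied placeholder values."""
    keys = sorted(values)
    combinations = product(*(values[key] for key in keys))
    return [template.format(**dict(zip(keys, combo))) for combo in combinations]


prompts = expand_prompts(TEMPLATES["multilingual"], language=["Spanish", "German"])
for prompt in prompts:
    print(prompt)
```

Keeping the template name next to each generated sample makes it easier to check later that no single template dominates the training set.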

Challenges and Considerations

Data Privacy

- Regulatory compliance - You must adhere to regulations such as GDPR, CCPA, HIPAA, and others.

- Data minimization - You must process only the necessary data needed to perform security functions.

- Data retention - You must establish appropriate retention periods for processed emails.

- Cross-border data transfers - You should consider legal implications when processing data across different jurisdictions.
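One way to act on data minimization is to mask obvious identifiers before an email body leaves your environment for an LLM. The sketch below uses two deliberately simple regular expressions as an assumption-laden starting point; it will miss many identifier formats, and the placeholder tokens are arbitrary.

```python
import re

# Simplified patterns for illustration; real PII detection needs far more coverage.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
LONG_NUMBER_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")  # e.g. card-like numbers


def redact(text: str) -> str:
    """Replace email addresses and long digit sequences with placeholder tokens."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return LONG_NUMBER_PATTERN.sub("[NUMBER]", text)


print(redact("Contact jane.doe@example.com, card 4111 1111 1111 1111."))
# Contact [EMAIL], card [NUMBER].
```

Note that the sender's address and domain are often exactly what a phishing check needs, so redaction has to be balanced against detection quality.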

Security of the LLM System

- System protection - Secure the LLM and its infrastructure from potential attacks.

- API security - Ensure secure API connections between the email system and the LLM.

- Access controls - Implement proper access controls and authentication mechanisms.

Accuracy and False Positives

- Balancing sensitivity - Strike a balance between catching threats and minimizing false alarms.

- Continuous updates - Regularly update the LLM to adapt to new phishing tactics.
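The sensitivity trade-off can be made concrete by measuring precision and recall at different decision thresholds. The sketch below assumes the detector outputs a phishing score between 0 and 1 for each email; the scores and labels are made-up sample data, not results from any real system.

```python
def precision_recall(scores: list[float], labels: list[bool],
                     threshold: float) -> tuple[float, float]:
    """Compute precision and recall when flagging every score >= threshold."""
    flagged = [score >= threshold for score in scores]
    true_pos = sum(f and y for f, y in zip(flagged, labels))
    false_pos = sum(f and not y for f, y in zip(flagged, labels))
    false_neg = sum((not f) and y for f, y in zip(flagged, labels))
    # With nothing flagged (or nothing to find), report 1.0 instead of dividing by zero.
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 1.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 1.0
    return precision, recall


# Made-up scores; True marks an email that really was phishing.
scores = [0.95, 0.80, 0.60, 0.40, 0.10]
labels = [True, True, False, True, False]

for threshold in (0.3, 0.5, 0.9):
    precision, recall = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

On this sample, lowering the threshold to 0.3 catches every phishing email at the cost of one false alarm, while raising it to 0.9 removes false alarms but misses two of the three threats.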

Closing Thoughts

I would love to hear your feedback and your ideas on other ways LLMs can be used to enhance email security. Please share them in the comments.

